The future of AI compute is photonics… or is it?

Exploring the potential of photonics to improve compute efficiency

Rapid advances in the digital economy and AI have been driving an ever-increasing need for compute, with an associated impact on energy consumption; keeping pace with this demand long-term is likely to require a fundamental step-change in compute efficiency (performance/Watt).

Previous articles have explored novel computing paradigms including neuromorphic, in-memory compute (IMC), wave-based analog computing and quantum computing. This article identifies some others which could also prove transformative over the coming years.

In digital logic, when a transistor switches, the previous state is lost, and this erasure of information is dissipated as heat, as dictated by the laws of thermodynamics and information theory.
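To give a sense of scale, the thermodynamic floor for erasing one bit is usually stated as Landauer's limit, E = k_B · T · ln 2. The short sketch below evaluates it at an assumed operating temperature of 300 K; the function name is illustrative only.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum heat dissipated when one bit of information is erased."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


if __name__ == "__main__":
    room_temperature = 300.0  # assumed operating temperature, in kelvin
    # Prints roughly 2.871e-21 J per erased bit
    print(f"{landauer_limit_joules(room_temperature):.3e} J per erased bit")
```

Real transistors dissipate far more than this per switching event, so the limit is a theoretical floor rather than a description of current hardware.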

Reversible computing, a concept dating back to the 1960s, sets out to remove this intrinsic energy loss through the development of reversible transistors that can return to their previous state.
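At the logic level, "reversible" means that the inputs can always be recovered from the outputs. As an illustration (not a description of any particular vendor's circuits), the sketch below contrasts a conventional AND gate, which discards information, with the Toffoli gate, a standard textbook example of a reversible gate.

```python
from itertools import product


def and_gate(a: int, b: int) -> int:
    """Conventional AND: two input bits collapse into one output bit."""
    return a & b


def toffoli(a: int, b: int, c: int) -> tuple[int, int, int]:
    """Reversible gate: passes a and b through, flips c only when a = b = 1."""
    return a, b, c ^ (a & b)


if __name__ == "__main__":
    # AND maps four input pairs onto two outputs, so inputs cannot be recovered.
    and_outputs = {and_gate(a, b) for a, b in product((0, 1), repeat=2)}
    print("distinct AND outputs:", len(and_outputs), "for 4 inputs")

    # Toffoli is a one-to-one mapping on 3 bits and is its own inverse.
    states = list(product((0, 1), repeat=3))
    assert len({toffoli(*s) for s in states}) == len(states)
    assert all(toffoli(*toffoli(*s)) == s for s in states)

    # With c = 0 the third output equals a AND b, and the inputs are preserved.
    print("toffoli(1, 1, 0) =", toffoli(1, 1, 0))  # (1, 1, 1)
```

Because no input state is ever thrown away, a gate like this has no information-erasure cost in principle, which is the property reversible hardware tries to exploit.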

Designing circuits that allow currents to flow both forwards and backwards through the logic gates lessens wear and tear on the transistors, thereby increasing chip lifespan as well as reducing power and cooling requirements.

On the downside, such an approach requires running the chips slower than normal, but the resulting lower performance can be offset by packing chips more densely and stacking in 3D to produce higher performance per unit volume with dramatically lower energy use – in theory, near-zero energy computing.

Implementing this concept in practice is far from trivial, but is now actively being pursued by companies such as Vaire Computing.

Rather than coding information as 1s and 0s, temporal logic (aka race logic) encodes information as the time it takes for a signal to propagate through the system.

This is similar in some ways to the spiking neural networks (SNNs) discussed within the neuromorphic article, but rather than encoding signals in the number or rate of spikes, temporal computing encodes the time at which the 0 → 1 transition occurs.

Built on four race logic operations, with at most one bit-flip per operation, this approach enables lower switching activity, higher throughput, and orders-of-magnitude improvement in energy efficiency, albeit at the cost of precision compared to traditional binary representation.
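The article does not name the four operations. A minimal sketch, assuming they are the ones commonly listed in the race logic literature (first arrival, last arrival, delay and inhibit), models each signal simply as the time at which its 0 → 1 edge arrives. The function names and the tiny two-route example are hypothetical.

```python
import math

NEVER = math.inf  # a rising edge that never arrives


def first_arrival(a: float, b: float) -> float:
    """MIN: output rises when the earlier input rises (an OR gate on edges)."""
    return min(a, b)


def last_arrival(a: float, b: float) -> float:
    """MAX: output rises when the later input rises (an AND gate on edges)."""
    return max(a, b)


def delay(a: float, d: float) -> float:
    """ADD-CONSTANT: hold the edge back by a fixed delay d."""
    return a + d


def inhibit(inhibitor: float, data: float) -> float:
    """Pass the data edge only if it arrives before the inhibitor edge."""
    return data if data < inhibitor else NEVER


def shortest_route_time() -> float:
    """Race two routes through a tiny graph; the winner is the shortest path."""
    start = 0.0
    via_a = delay(delay(start, 2), 5)  # start -> A costs 2, A -> end costs 5
    via_b = delay(delay(start, 4), 1)  # start -> B costs 4, B -> end costs 1
    return first_arrival(via_a, via_b)


if __name__ == "__main__":
    print(shortest_route_time())  # 5.0
```

Each wire makes a single transition, and the answer is read off as an arrival time, which is where the low switching activity comes from. Note that none of these primitives multiplies two signals.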

Temporal computing works well for accelerating AI decision-tree classifiers used for categorising images, and is a natural fit for in-sensor processing (image, sound, time-of-flight (ToF) cameras etc.).

It’s less suitable though for generic artificial neural networks (ANNs) due to its inability to perform arithmetic operations like multiplication and addition, although this can potentially be overcome by combining temporal data with pulse rate encoding.

In comparison to the electrons used in digital logic, photons are virtually frictionless, so they can travel faster (supporting higher bandwidths and lower latency) whilst also consuming much less energy.

This means that matrix multiplication for AI could in theory be performed passively at the ‘speed of light’, and be able to achieve orders of magnitude lower energy consumption by only using one photon per operation.

Source: photonics.com
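The one-photon-per-operation figure above can be put into rough numbers using the photon energy E = h · c / λ. The sketch below assumes a 1550 nm telecom-band wavelength and a hypothetical workload of one billion multiply-accumulate operations; both are illustrative assumptions, and the result ignores lasers, modulators, detectors and any optical/electrical conversion.

```python
PLANCK_J_S = 6.62607015e-34          # exact SI value of the Planck constant
LIGHT_SPEED_M_PER_S = 299_792_458.0  # exact SI value of the speed of light


def photon_energy_joules(wavelength_m: float) -> float:
    """Energy carried by a single photon of the given wavelength."""
    return PLANCK_J_S * LIGHT_SPEED_M_PER_S / wavelength_m


def optical_energy_joules(num_ops: float,
                          wavelength_m: float = 1550e-9,  # assumed wavelength
                          photons_per_op: float = 1.0) -> float:
    """Idealised optical energy for num_ops operations (a lower bound only)."""
    return num_ops * photons_per_op * photon_energy_joules(wavelength_m)


if __name__ == "__main__":
    print(f"{photon_energy_joules(1550e-9):.3e} J per photon")  # 1.282e-19
    print(f"{optical_energy_joules(1e9):.3e} J for 1e9 operations")
```

This is an idealised floor on the optical energy alone, so it should not be read as a prediction for a complete photonic system.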

In practice, though, photonic computing faces a number of challenges.

Photonic computing still has a lot of promise, though, especially if the optical/electrical conversion process can be sidestepped through the design of an all-optical computer. Akhetonics and others are pursuing such a goal.

For more discussion on photonics and photonic chip (PIC) design, see this previous article.

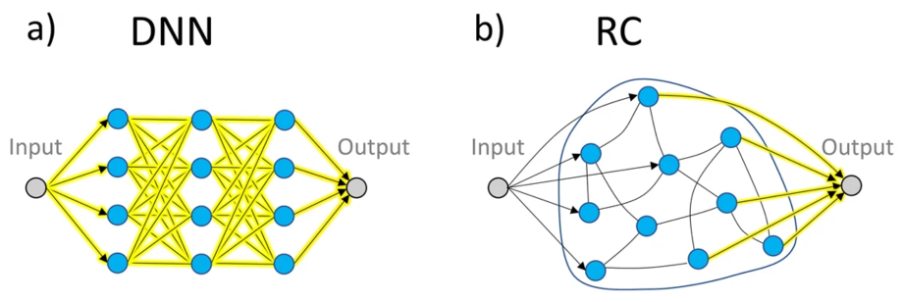

Within the broader field of neuromorphics, reservoir computing (RC) is a relatively new concept that explores ways of developing small, lightweight AI/ML models that are capable of fast inference but also fast adaptation.

Originally conceived in the early 2000s, it follows a similar architecture to ANNs but with a few notable differences. In an ANN, all the parameters are trainable, whilst in RC the input (sensing) and middle (processing) layers are fixed and only the output layer is trained, similar to how many biological neuronal systems work.

Source: Connecting reservoir computing with statistical forecasting and deep neural networks
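The fixed-reservoir, trained-readout split described above can be sketched as a small echo state network, one common form of reservoir computing. This is a dependency-free illustration under assumed settings (20 units, a sine-wave prediction task, ridge regression for the readout); all names and parameter values are hypothetical rather than taken from the cited work.

```python
import math
import random


def make_reservoir(n_units, seed=0):
    """Random input and recurrent weights that stay fixed (never trained)."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-0.5, 0.5) for _ in range(n_units)]
    w = [[rng.uniform(-1.0, 1.0) for _ in range(n_units)] for _ in range(n_units)]
    # Scale so each row's absolute sum is at most 0.9, a simple sufficient
    # condition for the state to forget its initial condition.
    scale = 0.9 / max(sum(abs(x) for x in row) for row in w)
    return w_in, [[x * scale for x in row] for row in w]


def run_reservoir(w_in, w, inputs):
    """Drive the fixed reservoir and collect [state..., bias] feature rows."""
    state = [0.0] * len(w_in)
    features = []
    for u in inputs:
        state = [
            math.tanh(w_in[i] * u + sum(wij * sj for wij, sj in zip(w[i], state)))
            for i in range(len(w_in))
        ]
        features.append(state + [1.0])
    return features


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def train_readout(features, targets, ridge=1e-4):
    """Ridge regression on the output layer: the only trained parameters."""
    k = len(features[0])
    gram = [[sum(f[i] * f[j] for f in features) + (ridge if i == j else 0.0)
             for j in range(k)] for i in range(k)]
    rhs = [sum(f[i] * t for f, t in zip(features, targets)) for i in range(k)]
    return solve(gram, rhs)


def demo(washout=50):
    """One-step-ahead prediction of a sine wave; returns the training MSE."""
    series = [math.sin(0.2 * t) for t in range(401)]
    inputs, targets = series[:-1], series[1:]
    w_in, w = make_reservoir(n_units=20)
    feats = run_reservoir(w_in, w, inputs)[washout:]  # drop the initial transient
    w_out = train_readout(feats, targets[washout:])
    preds = [sum(wi * fi for wi, fi in zip(w_out, f)) for f in feats]
    errors = [(p - t) ** 2 for p, t in zip(preds, targets[washout:])]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    print(f"training MSE: {demo():.2e}")
```

Training reduces to solving one small linear system, which is why adapting such a model is cheap compared with backpropagating through every layer of an ANN.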

The small parameter size and simpler training procedure of reservoir computing lead to reduced training time and resource consumption, making it ideal for many industry-level signal processing and learning tasks, such as real-time speech recognition and active noise control amongst others.

It also achieves a surprisingly high level of prediction accuracy in systems that exhibit strong nonlinearity and chaotic behaviour such as in weather forecasting.

Another interesting area being explored is its use within future 6G mobile networks to dynamically model and optimise channel capacity, overturning the traditional passive waveform design and channel coding approach.

For many of the methods discussed in this and previous articles, the concepts actually date back several decades, but due to implementation challenges, and the onward march of conventional digital logic, have remained largely an academic curiosity. With Moore’s Law slowing down, corporates and investors alike are now showing renewed interest in these novel alternatives.

They all show promise in achieving the important step-change needed in compute efficiency (performance/Watt); however, winning widespread commercial adoption will require them to also integrate within established ecosystems.

First and foremost, implementation is still likely to be a challenge, with such capabilities needing to be interfaced with host machines, digital logic, analog/digital conversion and an array of sensors and actuators for use in real-world applications.

In many cases they also take a fundamentally different approach to programming, so achieving adoption will require tools to be provided that can abstract away the underlying idiosyncrasies, and enable these technologies to be easily accessible by existing developer communities, but without diluting any of their unique compute efficiency benefits.

These technologies are unlikely to replace existing xPU compute, at least not in the short term, but do show real advantages in compute efficiency when applied to specific tasks.

In particular, they’re likely to be indispensable for deploying advanced AI models and computer vision on low-power devices in applications such as autonomous systems (vehicles and drones), robotics, remote sensing, wearable technology, smartphones, embedded systems, and IoT devices.

Interacting with dynamic and often unpredictable real-world environments remains challenging for modern robots, but is exactly the kind of task that biological brains have evolved to solve, and hence is one of the most promising application areas for technologies such as neuromorphics.

For signal processing, a hybrid solution might provide the best of both worlds: analog for approximate computations achieved with high speed using little energy, and digital for programming, storage, and computation with high precision, perhaps using an analog result as a very good initial guess to reduce the problem space. Such hybrid approaches can deliver speedups of 10x whilst also delivering energy savings of a similar magnitude.
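The "analog result as a very good initial guess" idea can be illustrated with a toy example. In the sketch below, a simulated low-precision analog stage (modelled, purely as an assumption, as the true answer with a 5% error) seeds a digital Newton iteration for a square root, and the iteration counts are compared against a cold start. It says nothing about real hardware speedups.

```python
import math


def newton_sqrt(value, initial_guess, rel_tol=1e-12, max_iter=100):
    """Digital refinement: Newton's method for x * x = value.

    Returns (root, iterations_used).
    """
    x = initial_guess
    for iteration in range(max_iter):
        if abs(x * x - value) <= rel_tol * value:
            return x, iteration
        x = 0.5 * (x + value / x)
    return x, max_iter


def simulated_analog_sqrt(value, relative_error=0.05):
    """Stand-in for a fast, approximate analog result (hypothetical 5% error)."""
    return math.sqrt(value) * (1.0 + relative_error)


def compare(value=1.0e6):
    """Return (cold_start_iterations, analog_seeded_iterations)."""
    _, cold = newton_sqrt(value, initial_guess=value)
    _, warm = newton_sqrt(value, initial_guess=simulated_analog_sqrt(value))
    return cold, warm


if __name__ == "__main__":
    cold, warm = compare()
    print(f"cold start: {cold} iterations, analog-seeded: {warm} iterations")
```

The digital stage still delivers the final high-precision answer; the analog estimate only shortens the path to it.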

And perhaps, with the renewed interest in voice interaction (e.g., ChatGPT’s Advanced Voice Mode; Google’s NotebookLM Audio Overview feature), these technologies could enable voice capabilities to be moved to the edge rather than depend on LLMs in the cloud which may be impractical in situations with poor connectivity, suboptimal in terms of latency, or simply undesirable in the case of sensitive personal or company confidential information.

The future of compute is likely to be heterogeneous, and hence there are a multitude of areas in which startups will be able to innovate and find their niche.
