The future of AI compute is photonics… or is it?

Exploring the potential of photonics to improve compute efficiency

Leveraging the principles of quantum mechanics to establish a new paradigm in computing was first hypothesised four or so decades ago; steady progress has been made since, but it’s really only in the past few years that interest and investment have ramped up, with quantum computing now widely regarded as a cornerstone in the future of compute.

Core to its promise is the introduction of qubits that can represent multiple states simultaneously (superposition), thereby enabling complex problems to be computed in parallel rather than sequentially.

This capability makes it particularly good at solving quadratic optimisation problems in industries such as logistics, finance, and supply chain management. Quantum computers are also intrinsically suited to simulating quantum systems; for instance in drug discovery, where molecular interactions are hard to simulate with classical computers.

Quantum compute power increases exponentially with the number of qubits available, so being able to scale the architecture is essential to achieving quantum’s commercial potential.

Simply adding more physical qubits might seem the obvious answer, but doing so results in an escalating error rate due to decoherence, noise, and imperfect operations, which limits current noisy intermediate-scale quantum (NISQ) computers to between 100 and 1,000 reliable quantum operations (QuOps).

Whilst interesting from a scientific perspective, the commercial value of such a capability is limited. To realise its promise, and go beyond the reach of any classical supercomputer (quantum advantage), quantum computing must deliver a million or more QuOps.
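
To make the gap between those figures concrete, here is a minimal back-of-envelope sketch. It assumes every operation fails independently with the same probability `p`, which is a simplification, and the error rates used are illustrative assumptions (one in a hundred, one in a thousand, one in a million) rather than measured values. Under that model, the expected number of operations up to the first error is `1 / p`.

```python
# Rough error budget, assuming every operation fails independently with the
# same probability p (a simplification; real devices have correlated noise).

def expected_ops_until_error(p: float) -> float:
    """Mean number of operations up to and including the first error."""
    return 1.0 / p


def success_probability(p: float, n_ops: int) -> float:
    """Probability that n_ops consecutive operations all succeed."""
    return (1.0 - p) ** n_ops


# Illustrative per-operation error rates (assumptions, not measurements).
for p in (1e-2, 1e-3, 1e-6):
    ops = expected_ops_until_error(p)
    print(f"error rate {p:.0e}: roughly {ops:,.0f} operations before an error")

# Chance of finishing a 1,000-operation circuit cleanly at p = 1e-3,
# and a 1,000,000-operation circuit at p = 1e-6.
print(f"{success_probability(1e-3, 1_000):.3f}")
print(f"{success_probability(1e-6, 1_000_000):.3f}")
```

Read this way, error rates between one in a hundred and one in a thousand line up with the 100 to 1,000 QuOps range, while a million QuOps calls for an effective error rate closer to one in a million.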

Achieving this requires a pivot away from NISQ principles to focus instead on delivering fault-tolerance.

Quantum error correction (QEC) will be key: it encodes information redundantly across multiple physical qubits and uses sophisticated parity checks to identify and correct errors, in essence combining multiple physical qubits to create a logical qubit. The approach was validated by research conducted by Google earlier this year and more recently incorporated into their latest quantum chip, Willow.

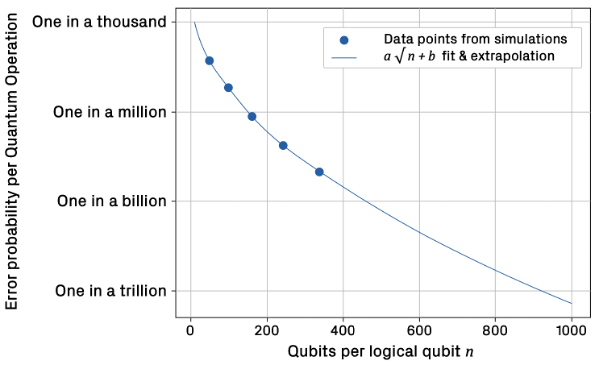

Provided the physical qubit error rate can be maintained below a critical threshold, every doubling of physical qubits used to generate a logical qubit results in a halving of the overall error rate.
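
One common way to express this kind of scaling is in terms of a code distance `d`, where each step up in distance (by 2) divides the logical error rate by a suppression factor. The sketch below is a toy model under that assumption, not a description of any particular device: the names `eps_ref`, `d_ref` and `lam` are hypothetical, the starting error rate is made up for illustration, and `lam = 2.0` corresponds to the "halving" described above.

```python
def logical_error_rate(
    distance: int,
    eps_ref: float = 3e-3,  # hypothetical logical error rate at d_ref
    d_ref: int = 3,         # reference (smallest) code distance
    lam: float = 2.0,       # suppression factor per distance step of 2
) -> float:
    """Toy model: each increase of the code distance by 2 divides the
    logical error rate by lam. Only valid below the error threshold."""
    if distance < d_ref or (distance - d_ref) % 2 != 0:
        raise ValueError("distance must be d_ref, d_ref + 2, d_ref + 4, ...")
    steps = (distance - d_ref) // 2
    return eps_ref / lam ** steps


for d in (3, 5, 7, 9):
    print(f"distance {d}: logical error rate ~ {logical_error_rate(d):.2e}")
```

The point of the model is that suppression compounds: each further step buys another factor of `lam`, so a modest physical error rate can, in principle, be driven down to very low logical error rates by enlarging the code.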

This approach of combining physical qubits to generate logical qubits can then be scaled up to arrange the logical qubits into quantum gates, circuits, and ultimately a quantum computer capable of reaching the targeted million QuOps (a MegaQuOp).

Doing so is likely to require around 50-100 logical qubits, with each logical qubit requiring ~161 physical qubits assuming a 99.9% qubit fidelity. State of the art right now is ~24 logical qubits, with Microsoft and Atom Computing looking to launch such a capability commercially next year.
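
As a rough check on these numbers, the ~161 figure is consistent with a distance-9 rotated surface code, which uses d² data qubits plus d² − 1 measurement qubits. The article does not name the code, so treat that as an assumption; the function names below are illustrative, and the total ignores overheads such as routing space between logical qubits.

```python
def physical_qubits_per_logical(distance: int) -> int:
    """Qubit count for one rotated surface-code patch (assumed layout):
    distance**2 data qubits plus distance**2 - 1 measurement qubits."""
    return 2 * distance**2 - 1


def total_physical_qubits(n_logical: int, distance: int) -> int:
    """Lower-bound total, ignoring routing and other overheads."""
    return n_logical * physical_qubits_per_logical(distance)


print(physical_qubits_per_logical(9))   # qubits per logical qubit at d = 9
for n_logical in (50, 100):
    total = total_physical_qubits(n_logical, 9)
    print(f"{n_logical} logical qubits -> {total:,} physical qubits")
```

Under these assumptions, a MegaQuOp machine with 50-100 logical qubits would need on the order of 8,000 to 16,000 physical qubits.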

As quantum computers scale further to provide sufficient logical qubits to support trillions of quantum operations, the number of physical qubits per logical qubit also increases (as shown below) and QEC becomes ever more computationally intensive, ironically requiring thousands of classical xPUs to rapidly process the vast amount of syndrome data needed to identify the location and type of each error that needs correcting.

Source: The Quantum Error Correction Report 2024, Riverlane

In short, there’s still a long way to go to reach the million QuOps target, and further still for quantum to deliver on some of the use cases that have driven much of the interest and speculation in quantum computing.

To give an example, it’s estimated that 13 trillion QuOps might be needed to break the RSA encryption used on the Internet (assuming 2048-bit keys), and in doing so realise the so-called quantum threat. This would require error rates to be as low as one in a trillion, far below the one in a thousand achieved in today’s implementations, and might require QEC running at 100 TB/s, equivalent to processing Netflix’s total global streaming data every second.

The challenge seems daunting, but steady progress is being made, with the UK Government targeting a million QuOps (MegaQuOp) by 2028, a billion QuOps (GigaQuOp) by 2032 and a trillion (TeraQuOp) by 2035. Others are forecasting similar progress, and see early fault-tolerant quantum computers (FTQC) becoming available within the next five years with MegaQuOp to GigaQuOp capabilities.

In the meantime, rapid advancements in (Gen)AI and massive GPU clusters have enabled AI to encroach on some of the complex problem areas previously thought of as the sole realm of quantum computing – physics, chemistry, materials science, and drug discovery (e.g., Google DeepMind’s AlphaFold, and AlphaProteo) – and caused some to raise doubts about the future role of quantum computing.

In truth though, AI and quantum are not mutually exclusive and are likely to be used together, as evidenced by Google DeepMind’s AlphaQubit, an AI system that dramatically improves the ability to detect and correct errors in quantum computers.

Longer term, and assuming it can hit its TeraQuOp target, quantum computing may indeed deliver on its promise. And if it can reach ~285 TeraQuOps, quantum may be able to simulate the FeMoCo molecule, improve ammonia production, and in doing so address food scarcity and global warming.
