The future of AI compute is photonics… or is it?

Exploring the potential of photonics to improve efficiency in the future of compute

The space industry has seen rapid change over the past decade with increased involvement from the private sector dramatically reducing costs through innovation, and democratising access for new commercial customers and market entrants – the New Space era.

Telecoms in particular has seen recent growth with the likes of Starlink, Kuiper, Globalstar, Iridium and others all vying to offer a mix of telecoms, messaging and internet services either to dedicated IoT devices and consumer equipment in the home or direct to mobile phones through 5G non-terrestrial networks (NTN).

Earth Observation (EO) is also going through a period of transformation. Applications such as weather forecasting, precision farming and environmental monitoring have traditionally involved sending data back to Earth for processing, but the latest EO satellites and hyperspectral sensors gather far more data than can be quickly downlinked. Hence there is a movement towards processing the data in orbit and relaying only the most important and valuable insights back to Earth; for instance, using AI to spot ships and downlink their locations, sizes and headings rather than transferring enormous logs of ocean data.
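
As a rough illustration of why onboard processing pays off, the sketch below compares the size of a raw hyperspectral data cube with a compact list of ship detections. The scene dimensions (1000 × 1000 pixels, 200 bands, 2 bytes per sample) and the four-field detection record are hypothetical values chosen for illustration, not figures from any particular mission.

```python
import struct

# Hypothetical hyperspectral scene: every dimension here is an illustrative assumption.
ROWS, COLS, BANDS, BYTES_PER_SAMPLE = 1000, 1000, 200, 2

def raw_scene_bytes(rows=ROWS, cols=COLS, bands=BANDS, bytes_per_sample=BYTES_PER_SAMPLE):
    """Size of the uncompressed data cube if the whole scene were downlinked."""
    return rows * cols * bands * bytes_per_sample

# Hypothetical detection record: latitude, longitude, length (m), heading (deg) as four float32s.
DETECTION_FORMAT = "<4f"

def pack_detections(detections):
    """Serialise (lat, lon, length_m, heading_deg) tuples into a compact binary payload."""
    return b"".join(struct.pack(DETECTION_FORMAT, *d) for d in detections)

if __name__ == "__main__":
    ships = [(51.5, 1.2, 180.0, 45.0), (51.7, 1.4, 95.0, 270.0)]
    print(raw_scene_bytes())             # 400000000 bytes for the raw cube
    print(len(pack_detections(ships)))   # 32 bytes for two detections
```

Even allowing for compression of the raw cube, the detection list is many orders of magnitude smaller, which is what makes downlinking insights rather than raw data attractive when the downlink is the bottleneck.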

Both applications depend on higher levels of compute to move signal processing up into the satellites, and on rapid development and launch cycles to gain competitive advantage, all whilst delivering on constrained budgets shaped by commercial opportunity rather than government funding.

The space environment, though, is harsh: exposure to ionising radiation degrades satellite electronics over time, and high-energy particles from Galactic Cosmic Rays (GCR) and solar flares strike the satellite, causing single event effects (SEE) that can ‘upset’ circuits and potentially lead to catastrophic failure.

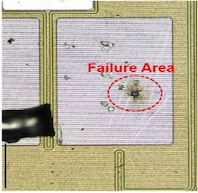

The cumulative dose is known as the total ionising dose (TID). Through charge trapped in the gate oxide, it gradually shifts transistor threshold voltages to the point where transistors remain permanently switched on (or off), leading to component failure.

In addition, the high-energy particles cause ‘soft errors’, such as a glitch in an output or a bit flip in memory that corrupts data and may cause a crash requiring a system reboot; in the worst case, they can trigger a destructive latch-up and burnout due to overcurrent.

The preferred method for reducing these risks is to use components that have been radiation-hardened (‘rad-hard’).

Historically this involved specialised process technologies, such as silicon-on-insulator (SOI) or silicon-on-sapphire (SOS), to provide high levels of immunity, but such an approach results in slower development and significantly higher cost that can’t be justified beyond mission-critical and deep-space missions.

Radiation-Hardening By Design (RHBD) is now more common for developing space-grade components. It involves a number of steps, such as changes in the substrate used for chip fabrication to lower manufacturing costs, switching from ceramic to plastic packaging to reduce size and cost, and specific design & layout changes to improve tolerance to radiation. One such design change is triple modular redundancy (TMR), in which mission-critical data is stored and processed in three separate locations on the chip, and the output is used only if two or more of the copies agree.
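
The two-of-three vote can be sketched in a few lines of Python. This is a software model for illustration only, since on-chip TMR is implemented in hardware logic, and the function names are hypothetical. The word-level `tmr_vote` follows the description above and returns no result when all three copies disagree, while `tmr_vote_bitwise` shows the per-bit majority that hardware voters commonly use.

```python
from typing import Optional

def tmr_vote(a: int, b: int, c: int) -> Optional[int]:
    """Word-level vote: return the value held by at least two copies, else None."""
    if a == b or a == c:
        return a
    if b == c:
        return b
    return None  # no two copies agree: report an uncorrectable error

def tmr_vote_bitwise(a: int, b: int, c: int) -> int:
    """Per-bit 2-of-3 majority: each output bit takes the value of at least two inputs."""
    return (a & b) | (a & c) | (b & c)

if __name__ == "__main__":
    stored = 0b1011_0010
    upset = stored ^ 0b0000_1000   # a single-event upset flips one bit in the second copy
    print(tmr_vote(stored, upset, stored) == stored)          # True
    print(tmr_vote_bitwise(stored, upset, stored) == stored)  # True
```

Note that the bitwise voter always produces an output, even when every copy is corrupted in different bits, which is why the word-level version is closer to the "use the output only if two or more agree" rule.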

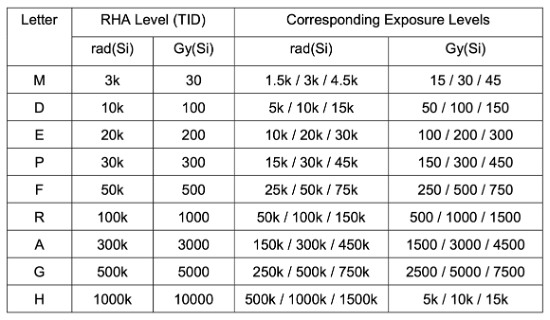

These measures in combination can provide protection against radiation dose levels (TID) in the 100-300 krad range – not as high as for fully rad-hard mission-critical components (1000 krad+), but more than sufficient for enabling multi-year operation with manageable risk, and at lower cost.

ESCC radiation hardness assurance (RHA) qualification levels

Having said that, they still cost much more than their commercial-off-the-shelf (COTS) equivalents due to larger die sizes, numerous design & test cycles, and smaller manufacturing volumes. RHBD components also tend to shy away from employing the latest semiconductor technology (smaller CMOS feature sizes, higher clock rates etc.) in order to maximise radiation tolerance, but in doing so sacrifice compute performance and power efficiency.

For today’s booming space industry, it will be challenging for space-grade components alone to keep pace with the required speed of innovation, high levels of compute, and constrained budgets driven by many New Space applications.

The challenge therefore is to design systems using COTS components that are sufficiently radiation-tolerant for the intended satellite lifespan, yet still able to meet the performance and cost goals. But this is far from easy, and the march to ever smaller process nodes (e.g., <7nm) to increase compute performance and lower power consumption is making COTS components increasingly vulnerable to the effects of radiation.

Over the past decade, the New Space industry has experimented with numerous approaches, but in essence the path most commonly taken to achieve the desired level of radiation tolerance for small satellites is through a combination of upscreening COTS components, dedicated shielding, and novel system design.

Upscreening

The radiation-tolerance of COTS components varies based on their internal design & process node, intended usage, and variations and imperfections in the chip-manufacturing process.

COTS components typically withstand radiation dose levels (TID) in the 5-10 krad range before malfunctioning. Some, such as those designed for automotive or medical applications and manufactured on larger process nodes (e.g., >28nm), may tolerate 30-50 krad or even more, whilst also coping better with the vibration, shock and temperature variations of space.

A process of upscreening can therefore be used to select component types with better intrinsic radiation tolerance, and then to pick out the parts with the highest tolerance within a batch.
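
One way to picture the batch-selection step is as a lot-acceptance check. Because TID testing is destructive, the sketch assumes that a sample of parts from each manufacturing lot is irradiated and the whole lot is accepted or rejected on the results. The `Lot` record, the sample values and the design margin of 2 over the required dose are all hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Lot:
    lot_id: str                    # hypothetical lot identifier
    sample_fail_krad: List[float]  # dose at which each irradiated sample stopped meeting spec

def accept_lot(lot: Lot, required_krad: float, margin: float = 2.0) -> bool:
    """Accept the lot only if its weakest tested sample survived required_krad * margin."""
    return min(lot.sample_fail_krad) >= required_krad * margin

def screen_lots(lots: List[Lot], required_krad: float, margin: float = 2.0) -> List[Lot]:
    """Keep passing lots, ranked so the lot with the strongest weakest sample comes first."""
    passing = [lot for lot in lots if accept_lot(lot, required_krad, margin)]
    return sorted(passing, key=lambda lot: min(lot.sample_fail_krad), reverse=True)

if __name__ == "__main__":
    lots = [
        Lot("A", [22.0, 25.0, 31.0]),
        Lot("B", [48.0, 52.0, 55.0]),
        Lot("C", [35.0, 40.0, 33.0]),
    ]
    print([lot.lot_id for lot in screen_lots(lots, required_krad=15.0)])  # ['B', 'C']
```

Ranking by the weakest sample rather than the average is a deliberately conservative choice, since a lot is only as good as its least tolerant parts.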

Whilst this addresses the degradation over time due to ionising radiation, the components will still be vulnerable to single event effects (SEE) caused by high-energy particles, and even more so if they are based on smaller process nodes and pack billions of transistors into each chip.

Shielding

Shielding would seem the obvious answer, and can reduce the radiation dose levels whilst protecting to some extent against the particles that cause SEE soft errors.

Shielding is typically made of aluminium, and its performance can be improved by increasing its thickness and/or coating it with a high-density metal such as tantalum. However, whilst aluminium shielding is generally effective, it’s less useful against some high-energy particles, which can scatter on impact and shower the component with secondary radiation.

The industry has more recently been experimenting with novel materials, one example being the 3D-printed nanocomposite metal material pioneered by Cosmic Shielding Corporation that is capable of reducing the radiation dose rate by 60-70% whilst also being more effective at limiting SEE.

Concept image of a custom plasteel shielding solution for a space-based computer (CSC)

System design

Careful component selection and shielding can go a long way towards achieving radiation tolerance, but equally important is to design in a number of fault management mechanisms within the overall system to cope with the different types of soft error.

Redundancy

Similar to TMR, triple redundancy can be employed at the system level to spot and correct processing errors, and shut down a faulty board where needed. SpaceX follows such an approach, using triple-redundancy within its Merlin engine computers, with each constantly checking on the others as part of a fault-tolerant design.
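
At board level, the same idea can be sketched as a vote over the outputs of redundant computers, with a running count of disagreements used to decide when a board should be isolated. The function names and the three-strikes `limit` are illustrative assumptions, not a description of SpaceX's or anyone else's actual flight software.

```python
from collections import Counter
from typing import Dict, Hashable, List, Optional, Sequence, Tuple

def vote_boards(outputs: Sequence[Hashable]) -> Tuple[Optional[Hashable], List[int]]:
    """Return (majority_output, indices of boards that disagreed).

    If no output is held by a strict majority, return (None, []) so the caller
    can fall back to a safe mode instead of trusting any single board.
    """
    value, count = Counter(outputs).most_common(1)[0]
    if count * 2 <= len(outputs):
        return None, []
    return value, [i for i, out in enumerate(outputs) if out != value]

def boards_to_isolate(fault_counts: Dict[int, int], dissenters: List[int], limit: int = 3) -> List[int]:
    """Update per-board disagreement counters and list boards that reached the limit."""
    for board in dissenters:
        fault_counts[board] = fault_counts.get(board, 0) + 1
    return [board for board, n in fault_counts.items() if n >= limit]

if __name__ == "__main__":
    counts: Dict[int, int] = {}
    for _ in range(3):
        result, bad = vote_boards([0.82, 0.82, 0.79])  # board 2 keeps disagreeing
        isolated = boards_to_isolate(counts, bad)
    print(result, isolated)  # 0.82 [2]
```

Requiring repeated disagreements before isolating a board avoids shutting down healthy hardware after a single transient upset that the next vote or a reboot would clear anyway.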

System reset

More widely within the system, background analysis and watchdog timers can be used to spot when a part of the system has hung or is acting erroneously and reboot accordingly to (hopefully) clear the error.
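
A watchdog is usually an independent hardware timer, but its logic can be modelled in software: healthy code must "kick" the timer regularly, and if it stops doing so for longer than the timeout, a reset is triggered. The class below is a hypothetical model with an injectable clock, so it can be exercised without real delays.

```python
import time
from typing import Callable

class Watchdog:
    """Software model of a watchdog timer (real ones are independent hardware)."""

    def __init__(self, timeout_s: float, on_timeout: Callable[[], None],
                 clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self.on_timeout = on_timeout  # e.g. a hypothetical reboot routine
        self.clock = clock
        self.last_kick = clock()

    def kick(self) -> None:
        """Called periodically by healthy software to show it is still making progress."""
        self.last_kick = self.clock()

    def check(self) -> bool:
        """Called by the monitor; fires the reset and returns True if the timeout expired."""
        if self.clock() - self.last_kick > self.timeout_s:
            self.on_timeout()
            self.last_kick = self.clock()  # restart the countdown after the reset
            return True
        return False

if __name__ == "__main__":
    now = [0.0]  # simulated time in seconds
    resets = []
    wd = Watchdog(2.0, lambda: resets.append(now[0]), clock=lambda: now[0])
    now[0] = 1.5
    wd.kick()    # task is alive
    now[0] = 3.0
    wd.check()   # 1.5 s since last kick: no reset
    now[0] = 4.0
    wd.check()   # 2.5 s since last kick: task has hung, reset
    print(resets)  # [4.0]
```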

Error correction

To mitigate the risk of data becoming corrupted in memory due to a single event upset (SEU), “scrubber” circuits utilising error-detection and correction codes (EDAC) can be used to continuously sweep the memory, checking for errors and writing back any corrections.
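
The scrubbing loop can be sketched with a Hamming(7,4) code, which stores each 4-bit value as a 7-bit codeword and can locate and correct any single flipped bit. This is a deliberately small stand-in: practical EDAC memories typically use wider single-error-correct, double-error-detect (SECDED) codes, and the function names here are hypothetical.

```python
from typing import List, Tuple

def hamming74_encode(nibble: int) -> int:
    """Encode 4 data bits; parity bits sit at positions 1, 2 and 4 (1-indexed)."""
    d = [(nibble >> i) & 1 for i in range(4)]
    bits = {3: d[0], 5: d[1], 6: d[2], 7: d[3]}
    bits[1] = bits[3] ^ bits[5] ^ bits[7]
    bits[2] = bits[3] ^ bits[6] ^ bits[7]
    bits[4] = bits[5] ^ bits[6] ^ bits[7]
    return sum(bits[p] << (p - 1) for p in range(1, 8))

def hamming74_syndrome(code: int) -> int:
    """XOR of the positions of all set bits: 0 if valid, else the flipped position."""
    s = 0
    for p in range(1, 8):
        if (code >> (p - 1)) & 1:
            s ^= p
    return s

def hamming74_decode(code: int) -> Tuple[int, int]:
    """Return (nibble, corrected_codeword), fixing any single-bit error."""
    s = hamming74_syndrome(code)
    if s:
        code ^= 1 << (s - 1)
    nibble = (((code >> 2) & 1) | (((code >> 4) & 1) << 1)
              | (((code >> 5) & 1) << 2) | (((code >> 6) & 1) << 3))
    return nibble, code

def scrub(memory: List[int]) -> int:
    """Sweep every stored codeword, write back corrections, return the number fixed."""
    fixed = 0
    for addr, code in enumerate(memory):
        _, corrected = hamming74_decode(code)
        if corrected != code:
            memory[addr] = corrected
            fixed += 1
    return fixed

if __name__ == "__main__":
    memory = [hamming74_encode(n) for n in range(16)]
    memory[5] ^= 1 << 3                    # simulate an SEU flipping one stored bit
    print(scrub(memory))                   # 1 word corrected
    print(hamming74_decode(memory[5])[0])  # 5
```

Sweeping continuously matters because a single-error-correcting code cannot recover a word that has accumulated two upsets; scrubbing repairs each word while it still has only one.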

Latch-up protection

Latch-ups are a particular issue and can result in a component malfunctioning or even being permanently damaged due to overcurrent, but again can be mitigated to some extent through the inclusion of latch-up protection circuitry within the system to monitor and reset the power when a latch-up is detected.
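
In practice latch-up protection is usually a fast analogue current-sense and power-switch circuit, but the decision logic can be sketched as follows: if the supply current stays above a threshold for several consecutive samples, the load is power-cycled. The threshold, sample count and `power_cycle` callback are hypothetical; requiring consecutive samples is one simple way to avoid tripping on brief inrush transients.

```python
from typing import Callable, Iterable

def monitor_latchup(samples_ma: Iterable[float], threshold_ma: float,
                    trip_count: int, power_cycle: Callable[[], None]) -> int:
    """Power-cycle the load when current exceeds threshold_ma for trip_count
    consecutive samples; return the number of power cycles performed."""
    over = 0
    cycles = 0
    for current_ma in samples_ma:
        over = over + 1 if current_ma > threshold_ma else 0
        if over >= trip_count:
            power_cycle()  # hypothetical hook: cut supply, wait, restore
            cycles += 1
            over = 0       # start counting afresh after the reset
    return cycles

if __name__ == "__main__":
    trace = [100, 120, 450, 460, 470, 110, 100, 455, 105]  # illustrative readings (mA)
    events = []
    n = monitor_latchup(trace, threshold_ma=300, trip_count=3,
                        power_cycle=lambda: events.append("cycle"))
    print(n)  # 1: the sustained 450-470 mA run trips, the lone 455 mA spike does not
```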

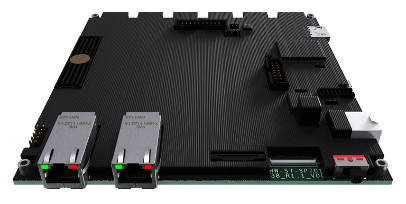

This is a vibrant area for innovation within the New Space industry with a number of companies developing novel solutions including Zero Error Systems, Ramon Space, Resilient Computing and AAC Clyde Space amongst others.

Whilst the New Space industry has shown that it’s possible to leverage cheaper and higher performing COTS components when they’re employed in conjunction with various fault-mitigation measures, the effectiveness of this approach will ultimately be dependent on the satellite’s orbit, the associated levels of radiation it will be exposed to, and its target lifespan.

For the New Space applications mentioned earlier, a low Earth orbit (LEO) is commonly needed to improve image resolution (EO), and to optimise transmission latency and link budget (telecoms).
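
To put rough numbers on the latency and link-budget advantage, the sketch below compares a hypothetical 550 km LEO altitude with geostationary orbit (35,786 km). It assumes the satellite is directly overhead, so range equals altitude, and a 2 GHz carrier; both are illustrative assumptions, and real slant ranges are longer.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

def one_way_delay_ms(range_km: float) -> float:
    """Propagation delay for a single ground-to-satellite hop, in milliseconds."""
    return range_km * 1e3 / SPEED_OF_LIGHT_M_S * 1e3

def free_space_path_loss_db(range_km: float, freq_ghz: float) -> float:
    """Free-space path loss, 20*log10(4*pi*d*f/c), in dB."""
    d_m = range_km * 1e3
    f_hz = freq_ghz * 1e9
    return 20 * math.log10(4 * math.pi * d_m * f_hz / SPEED_OF_LIGHT_M_S)

if __name__ == "__main__":
    # Illustrative assumptions: satellite at zenith, 2 GHz carrier.
    for name, altitude_km in [("LEO", 550.0), ("GEO", 35_786.0)]:
        print(f"{name}: {one_way_delay_ms(altitude_km):.1f} ms, "
              f"{free_space_path_loss_db(altitude_km, 2.0):.1f} dB")
```

Under these assumptions the one-way delay drops from roughly 119 ms to under 2 ms, and free-space path loss falls by about 36 dB (20·log10 of the distance ratio), regardless of the carrier frequency chosen.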

Orbiting at this altitude, though, results in a shorter satellite lifespan. LEO satellites may have a useful lifespan limited to ~5-7 years (Starlink 5 years; Kuiper 7 years), whilst smaller CubeSats, typically used for research purposes, may last only a matter of months.

Given these short periods in service, the cumulative radiation exposure for LEO satellites will be relatively low (<30 krad), making it possible to design them with upscreened COTS components combined with fault management and some degree of shielding without too much risk of failure (and in the case of telecoms, the satellite constellations are complemented with spares should any fail).
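
A quick way to check whether a part is adequate for a given orbit and lifespan is to compare its TID capability with the expected mission dose, often expressed as a radiation design margin (RDM). The annual dose, part ratings and required margin of 2 below are illustrative assumptions; real mission doses come from orbit-specific radiation environment modelling and depend strongly on shielding.

```python
def mission_tid_krad(annual_dose_krad: float, lifetime_years: float) -> float:
    """Cumulative dose, assuming a constant annual dose behind the chosen shielding."""
    return annual_dose_krad * lifetime_years

def design_margin(part_tid_krad: float, mission_krad: float) -> float:
    """Radiation design margin: part capability divided by expected mission dose."""
    return part_tid_krad / mission_krad

def part_is_suitable(part_tid_krad: float, annual_dose_krad: float,
                     lifetime_years: float, required_margin: float = 2.0) -> bool:
    """True if the part clears the (assumed) required margin for this mission."""
    dose = mission_tid_krad(annual_dose_krad, lifetime_years)
    return design_margin(part_tid_krad, dose) >= required_margin

if __name__ == "__main__":
    # Hypothetical LEO mission: 2 krad/year behind shielding, 5-year life.
    print(part_is_suitable(30.0, 2.0, 5.0))  # True: an upscreened 30 krad part
    print(part_is_suitable(10.0, 2.0, 5.0))  # False: a typical COTS part
```

Under these assumed numbers, a 30 krad upscreened part gives a margin of 3 over a 10 krad mission, whereas a typical 5-10 krad COTS part falls short.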

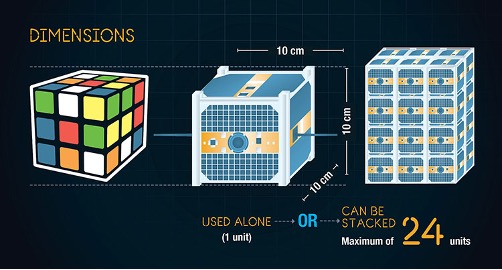

With CubeSats, where lifetime and hence radiation exposure are lower still (<10 krad), and the emphasis is often on minimising size and weight to reduce costs in manufacture and launch, dedicated shielding may not be used at all, with reliance being placed instead on radiation-tolerant design at the system level… although by taking such risks, CubeSats tend to be prone to more SEE-related errors and repeated reboots (possibly as often as every 3-6 weeks).

In the telecoms industry, there is growing interest in deploying services that support direct-to-device (D2D) 5G connectivity between satellites and mobile phones to provide rural coverage and emergency support, similar to Apple’s Emergency SOS service delivered via Globalstar’s satellite constellation.

To date, constellations such as Globalstar’s have relied on simple bent-pipe (‘transparent’) transponders to relay signals between basestations on the ground and mobile devices. Going forward, there is growing interest in moving the basestations up into the satellites themselves to deliver higher-bandwidth connectivity (a ‘regenerative’ approach). Lockheed Martin, in conjunction with Accelercomm, Radisys and Keysight, has already demonstrated the technical feasibility of a 5G system and plans a live trial in orbit later in 2024.

Source: Lockheed Martin

The next few years will see further deployments of 5G non-terrestrial networks (NTN) for IoT and consumer applications as suitable space-grade and space-tolerant processors for running gNodeB basestation software and the associated inter-satellite link (ISL) processing become more widely available, and the 3GPP R19 standards for the NTN regenerative payload approach are finalised.

New Space is driving a need for high levels of compute performance and rapid development using the latest technology, but is limited by strict budgetary constraints to achieve commercial viability. The space environment, though, is harsh, and delivering on these goals is challenging.

Radiation-hardening of electronic components is the gold standard, but it is expensive due to lengthier design & testing cycles and the higher marginal cost of manufacturing at smaller volumes, and it often sacrifices compute performance to achieve higher levels of radiation immunity and a longer lifespan.

COTS components can unlock higher levels of compute performance and rapid development, but are typically too vulnerable to radiation exposure to be used in space applications on their own for any appreciable length of time without risk of failure.

Radiation tolerance can be improved through a process of upscreening components, dedicated shielding, and fault management measures within the system design. This approach is typically sufficient for New Space applications targeting LEO, where limited satellite lifespans and some protection from the Earth’s magnetic field keep lifetime radiation doses low enough to be adequately mitigated by the rad-tolerant approach.

Satellites are set to become more intelligent, whether through running advanced communications software, deriving insights from imaging data, or using AI for other New Space applications. This thirst for compute performance is being met by a mix of rad-tolerant (~30-50 krad) and rad-hard (>100 krad) processors and single-board computers (SBC) coming to market from startups (Aethero), more established companies (Vorago Technologies; Coherent Logix), and industry stalwarts such as BAE. NASA’s own HPSC, developed in partnership with Microchip, offers both rad-tolerant (for LEO) and rad-hard RISC-V options launching in 2025.

The small satellite market is set to expand significantly over the coming decade with the growing democratisation of space and the launch, and subsequent refresh, of the telecoms constellations. Starlink alone will likely need to replace 2,400 satellites a year (assuming a 12k constellation and a 5-year satellite lifespan), and could need even more if permitted to expand its constellation with another 30,000 satellites over the coming years.
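
The replacement figure follows from a simple steady-state assumption: if every satellite is retired at the end of its lifespan, the annual launch rate needed to maintain the constellation is its size divided by that lifespan. The sketch ignores early failures and phased deployment, so it is an approximation only.

```python
def annual_replacements(constellation_size: int, lifespan_years: float) -> float:
    """Satellites launched per year to hold a constellation at steady state."""
    return constellation_size / lifespan_years

if __name__ == "__main__":
    print(annual_replacements(12_000, 5))           # 2400.0
    print(annual_replacements(12_000 + 30_000, 5))  # 8400.0 with the proposed expansion
```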

As interest from the private sector in the commercialisation of space grows, it’s opening up a number of opportunities for startups to innovate and bring solutions to market that boost performance, increase radiation tolerance, and reduce cost.

Some, such as EdgX, are seeking to revolutionise compute and power efficiency through neuromorphic computing; others (Zero Error Systems; Apogee Semiconductor) are focused on developing software and IP libraries that can be integrated into future designs for radiation-tolerant components and systems; and still others (Little Place Labs) are using onboard AI to transform satellite data in near-real time into actionable insights for rapid relay back to Earth.

Space has had many false dawns in the past, but the future for New Space looks promising, and the opportunities it presents for startups to innovate and disrupt the norms are numerous.
