The road to high-performance and robust satellite compute

Techniques for improving radiation tolerance for New Space high-performance compute.

Whilst security is undoubtedly important, fundamentally it’s a business case based on the time-value depreciation of the asset being protected, which in general leads to a design principle of “it’s good enough” and/or “it will only be broken in a given timeframe”.

At the other extreme, history offers many examples where reliance on theoretical certainty failed because of unknowns. One such example is the Titanic, which was considered unsinkable by its naval architects; the unknown was the iceberg!

It is a simple fact that weaker randomness leads to weaker encryption, and with the inexorable rise of compute power due to Moore’s law, the barriers to breaking encryption are eroding. And now, with the advent of the quantum era, cyber-crime is about to enter an age in which encryption done less than perfectly (i.e. lacking true randomness) will no longer be ‘good enough’ and will become ever more vulnerable to attack.

In the following, Bloc’s Head of Research David Pollington takes a deeper dive into the landscape of secure communications and how it will need to evolve to combat the threat of the quantum-era. Bloc’s research findings inform decisions on investment opportunities.

Much has been written on quantum computing’s threat to encryption algorithms used to secure the Internet, and the robustness of public-key cryptography schemes such as RSA and ECDH that are used extensively in internet security protocols such as TLS.

These schemes perform two essential functions: securely exchanging keys for encrypting internet session data, and authenticating the communicating partners to protect the session against Man-in-the-Middle (MITM) attacks.

The security of these approaches relies on the difficulty of either factoring large integers (RSA) or calculating discrete logarithms (ECDH). Whilst safe against the ‘classical’ computing capabilities available today, both will succumb to Shor’s algorithm on a sufficiently large quantum computer. In fact, a team of Chinese scientists has already demonstrated the ability to factor a 48-bit integer with just 10 qubits, using Schnorr’s algorithm in combination with the quantum approximate optimization algorithm (QAOA) to speed up factorisation. Projecting forwards, they estimate that 372 qubits may be sufficient to crack today’s RSA-2048 encryption, a qubit count that is well within reach over the next few years.
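To make the dependence on factoring concrete, here is a minimal sketch of textbook RSA with deliberately tiny primes. The primes, the message value and the function names are illustrative assumptions, and real RSA adds padding and uses 2048-bit or larger moduli. The point is only that anyone who can factor the public modulus can rebuild the private key, which is the step Shor’s algorithm would make tractable at scale.

```python
# Toy textbook RSA: illustrative only, never use parameters like these for real keys.
from math import isqrt


def make_keypair(p: int, q: int, e: int = 65537):
    """Build an RSA key pair from two primes (no padding, textbook form)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e; requires Python 3.8+
    return (n, e), d


def factor_by_trial_division(n: int):
    """Classical brute-force factoring; feasible here only because n is tiny."""
    for candidate in range(2, isqrt(n) + 1):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n has no non-trivial factors")


def recover_private_exponent(n: int, e: int) -> int:
    """An attacker who factors n can derive d from the public key alone."""
    p, q = factor_by_trial_division(n)
    return pow(e, -1, (p - 1) * (q - 1))


public_key, private_d = make_keypair(1009, 1013)  # hypothetical toy primes
n, e = public_key
message = 424242                                  # must be smaller than n
ciphertext = pow(message, e, n)                   # encrypt with the public key

stolen_d = recover_private_exponent(n, e)         # attacker sees only (n, e)
recovered = pow(ciphertext, stolen_d, n)          # attacker decrypts
print(recovered == message)
```

Trial division stands in for the attacker here purely because the modulus is small; for RSA-2048 no known classical method is practical, which is exactly the assumption a large quantum computer would break.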

The race is on therefore to find a replacement to the incumbent RSA and ECDH algorithms… and there are two schools of thought: 1) Symmetric encryption + Quantum Key Distribution (QKD), or 2) Post Quantum Cryptography (PQC).

In contrast to the threat to current public-key algorithms, most symmetric cryptographic algorithms (e.g., AES) and hash functions (e.g., SHA-2) are considered to be secure against attacks by quantum computers.

Whilst Grover’s algorithm running on a quantum computer can speed up brute-force attacks against symmetric ciphers (effectively halving the key strength in bits), an AES block cipher using 256-bit keys is currently considered by the UK’s security agency NCSC to be safe from quantum attack, provided that a secure mechanism is in place for sharing the keys between the communicating parties. Quantum Key Distribution (QKD) is one such mechanism.
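The arithmetic behind that halving can be sketched in a few lines. This assumes the usual simplified model in which exhaustive search over a k-bit key costs about 2^k trials classically and about 2^(k/2) evaluations with Grover’s algorithm; the function name is an illustrative assumption, and real-world quantum attack costs would be higher than this idealised figure.

```python
def effective_security_bits(key_bits: int, quantum: bool) -> int:
    """Approximate brute-force work factor as log2(number of trials)."""
    # Classical exhaustive search: about 2**k trials.
    # Grover's search: about 2**(k/2) evaluations (a quadratic speed-up).
    return key_bits // 2 if quantum else key_bits


for key_bits in (128, 192, 256):
    classical = effective_security_bits(key_bits, quantum=False)
    grover = effective_security_bits(key_bits, quantum=True)
    print(f"AES-{key_bits}: classical ~2^{classical}, Grover ~2^{grover}")
```

Under this model AES-256 retains roughly 128 bits of strength against a quantum adversary, which is why the 256-bit key size is the one singled out as safe.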

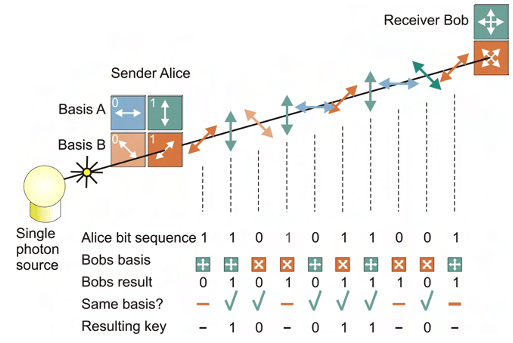

Rather than relying on the security of underlying mathematical problems, QKD is based on the properties of quantum mechanics to mitigate tampering of the keys in transit. QKD uses a stream of single photons to send each quantum state and communicate each bit of the key.
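As a rough illustration of why tampering is detectable, the sketch below simulates a BB84-style exchange classically. The article does not name a specific protocol, so BB84, the intercept-and-resend attacker and all function names are assumptions made for illustration. Each photon carries one bit in a randomly chosen basis, and measuring in the wrong basis yields a random result, so an eavesdropper inevitably introduces errors into the sifted key.

```python
import random


def bb84_sift(n_photons: int, eavesdrop: bool, rng: random.Random):
    """Simulate a BB84-style exchange; return the sifted (alice_key, bob_key)."""
    alice_key, bob_key = [], []
    for _ in range(n_photons):
        bit = rng.getrandbits(1)
        alice_basis = rng.getrandbits(1)  # 0 = rectilinear, 1 = diagonal
        photon_bit, photon_basis = bit, alice_basis

        if eavesdrop:
            eve_basis = rng.getrandbits(1)
            if eve_basis != photon_basis:
                photon_bit = rng.getrandbits(1)  # wrong basis: random outcome
            photon_basis = eve_basis             # Eve resends in her own basis

        bob_basis = rng.getrandbits(1)
        if bob_basis == photon_basis:
            measured = photon_bit
        else:
            measured = rng.getrandbits(1)        # wrong basis: random outcome

        if bob_basis == alice_basis:             # sifting: keep matching bases
            alice_key.append(bit)
            bob_key.append(measured)
    return alice_key, bob_key


def error_rate(key_a, key_b) -> float:
    """Fraction of sifted bits on which the two parties disagree."""
    if not key_a:
        return 0.0
    return sum(a != b for a, b in zip(key_a, key_b)) / len(key_a)


rng = random.Random(7)  # fixed seed so the simulation is repeatable
clean = error_rate(*bb84_sift(4000, eavesdrop=False, rng=rng))
tapped = error_rate(*bb84_sift(4000, eavesdrop=True, rng=rng))
print(f"error rate without eavesdropper: {clean:.2%}")
print(f"error rate with eavesdropper:    {tapped:.2%}")
```

In this idealised model the sifted keys match exactly on a clean channel, while an intercept-and-resend attacker pushes the error rate towards 25%, which the two parties can detect by comparing a sample of their bits. Real links also have noise and loss, so practical systems work with an error threshold rather than expecting zero.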

However, there are a number of implementation considerations that affect its suitability: integration complexity and cost, distance constraints, authentication, and DoS attacks.

Rather than replacing existing public key infrastructure, an alternative is to develop more resilient cryptographic algorithms.

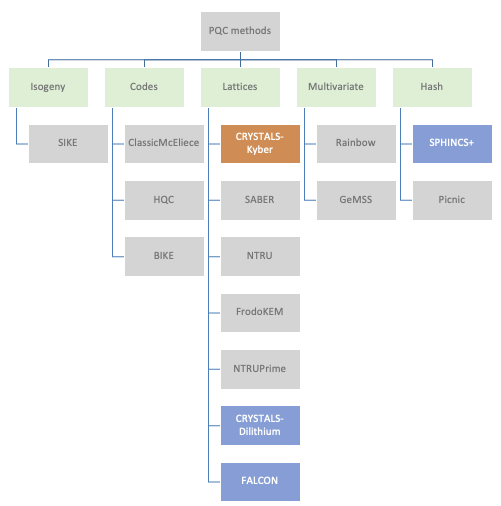

With that in mind, NIST have been running a collaborative activity with input from academia and the private sector (e.g., IBM, ARM, NXP, Infineon) to develop and standardise new algorithms deemed to be quantum-safe.

A number of mathematical approaches have been explored, with a large variation in performance. Structured lattice-based cryptography algorithms have emerged as prime candidates for standardisation due to a good balance between security, key sizes, and computational efficiency. Importantly, it has been shown that lattice-based algorithms can be implemented on low-power IoT edge devices (e.g., using a Cortex-M4) whilst maintaining viable battery runtimes.

Four algorithms have been short-listed by NIST: CRYSTALS-Kyber for key establishment, CRYSTALS-Dilithium for digital signatures, and then two additional digital signature algorithms as fallback (FALCON, and SPHINCS+). SPHINCS+ is a hash-based backup in case serious vulnerabilities are found in the lattice-based approach.
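To give a feel for what “hash-based” means, here is a minimal sketch of a Lamport one-time signature, the simplest scheme in that family. This is not SPHINCS+ itself, which builds on considerably more elaborate constructions to allow many signatures per key; the function names are illustrative assumptions. What it shows is that security rests only on the one-way property of a hash function (SHA-256 here) rather than on lattices, factoring or discrete logarithms.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    """One-way function used throughout; SHA-256 is an illustrative choice."""
    return hashlib.sha256(data).digest()


def keygen():
    """Secret key: 256 pairs of random values. Public key: their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(zero), H(one)) for zero, one in sk]
    return sk, pk


def message_bits(message: bytes):
    """The 256 bits of the message digest, most significant bit first."""
    digest = H(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk, message: bytes):
    """Reveal one secret per digest bit. A key must sign only ONE message."""
    return [sk[i][bit] for i, bit in enumerate(message_bits(message))]


def verify(pk, message: bytes, signature) -> bool:
    """Check that each revealed secret hashes to the matching public value."""
    bits = message_bits(message)
    return len(signature) == 256 and all(
        H(revealed) == pk[i][bit]
        for i, (revealed, bit) in enumerate(zip(signature, bits))
    )


sk, pk = keygen()
signature = sign(sk, b"firmware v1.2")
print(verify(pk, b"firmware v1.2", signature))   # genuine message
print(verify(pk, b"firmware v6.6", signature))   # tampered message
```

The trade-off is visible even in this toy: keys and signatures run to several kilobytes and each key pair is single-use, which hints at why hash-based signatures are positioned as a conservative fallback rather than the primary choice.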

NIST aims to have the PQC algorithms fully standardised by 2024, but has released technical details in the meantime so that security vendors can start developing end-to-end solutions as well as stress-testing the candidates for any vulnerabilities. A number of companies (e.g., ResQuant, PQShield and those mentioned earlier) have already started developing hardware implementations of the two primary algorithms.

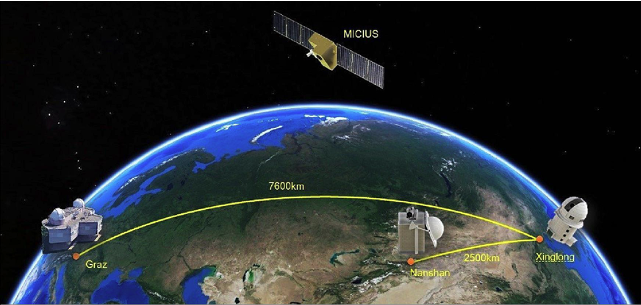

QKD has made slow progress in achieving commercial adoption, partly because of the various implementation concerns outlined above. China has been the most active: in 2016 the QUESS project created an international QKD satellite channel between China and Vienna, and 2017 saw the completion of a 2,000 km fibre link between Beijing and Shanghai. The original goal of commercialising a European/Asian quantum-encrypted network by 2020 hasn’t materialised, although the European Space Agency is now aiming to launch a quantum satellite in 2024 that will spend three years in orbit testing secure communications technologies.

BT has recently teamed up with EY (and BT’s long term QKD tech partner Toshiba) on a two year trial interconnecting two of EY’s offices in London, and Toshiba themselves have been pushing QKD in the US through a trial with JP Morgan.

Other vendors in this space include ID Quantique (tech provider for many early QKD pilots), UK-based KETS, MagiQ, Qubitekk, QuintessenceLabs and QuantumCtek (commercialising QKD in China). An outlier is Arqit, a QKD supporter and strong advocate for symmetric encryption, which addresses many of the QKD implementation concerns through its own quantum-safe network and has partnered with Virgin Orbit to launch five QKD satellites, beginning in 2023.

Given the issues identified with QKD, both the UK (NCSC) and US (NSA) security agencies have so far discounted QKD for use in government and defence applications, and are instead recommending post-quantum cryptography (PQC) as the more cost-effective and easily maintained solution.

There may still be use cases (e.g., in defence, financial services etc.) where the parties are in fixed locations, secrecy needs to be guaranteed, and costs are not the primary concern. But for the mass market where public-key solutions are already in widespread use, the best approach is likely to be adoption of post-quantum algorithms within the existing public-key frameworks once the algorithms become standardised and commercially available.

Introducing the new cryptographic algorithms will, though, have far-reaching consequences, with updates needed to protocols, schemes, and infrastructure; according to a recent World Economic Forum report, more than 20 billion digital devices will need to be upgraded or replaced.

Widespread adoption of the new quantum-safe algorithms may take 10-15 years, but with the US, UK, French and German security agencies driving the use of post-quantum cryptography, it’s likely to become the de facto standard for high-security use cases in government and defence much sooner.

Organisations responsible for critical infrastructure are also likely to move more quickly – in the telco space, the GSMA, in collaboration with IBM and Vodafone, have recently launched the GSMA Post-Quantum Telco Network Taskforce. And Cloudflare has also stepped up, launching post-quantum cryptography support for all websites and APIs served through its Content Delivery Network (19+% of all websites worldwide according to W3Techs).

Irrespective of which encryption approach is adopted, their efficacy is ultimately dependent on the strength of the cryptographic key used to encrypt the data. Any weaknesses in the random number generators used to generate the keys can have catastrophic results, as was evidenced by the ROCA vulnerability in an RSA key generation library provided by Infineon back in 2017 that resulted in 750,000 Estonian national ID cards being compromised.

Encryption systems often rely upon Pseudo Random Number Generators (PRNG) that generate random numbers using mathematical algorithms, but such an approach is deterministic and reapplication of the seed generates the same random number.
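The determinism is easy to demonstrate. The sketch below uses Python’s standard `random` module, which is a Mersenne Twister PRNG and is not intended for cryptographic use; the `keystream` helper and the seed values are illustrative assumptions. Reapplying the same seed reproduces the same “random” bytes exactly, so anyone who learns or guesses the seed can regenerate every key derived from it.

```python
import random


def keystream(seed: int, n_bytes: int) -> bytes:
    """Generate n_bytes from a PRNG seeded with the given value."""
    rng = random.Random(seed)  # Mersenne Twister: fast, but NOT crypto-safe
    return bytes(rng.getrandbits(8) for _ in range(n_bytes))


first = keystream(seed=2024, n_bytes=16)
replay = keystream(seed=2024, n_bytes=16)   # same seed, regenerated later
other = keystream(seed=2025, n_bytes=16)    # different seed

print(first.hex())
print(replay.hex())
print("same seed reproduces the key:", first == replay)
print("different seed gives a different key:", first != other)
```

For real key material, Python code would normally draw from the operating system’s entropy source via the `secrets` module instead, which moves the question from the algorithm to the quality of the underlying entropy.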

True Random Number Generators (TRNGs) utilise a physical process such as thermal electrical noise that in theory is stochastic, but in reality is somewhat deterministic, as it relies on post-processing algorithms to provide randomness and can be influenced by biases within the physical device. Furthermore, because they are based on chaotic and complex physical systems, TRNGs are hard to model, and it can therefore be hard to know whether an attacker has manipulated them so that the output retains the “quality of the randomness” but comes from a deterministic source. Ultimately, the deterministic nature of PRNGs and TRNGs opens them up to quantum attack.

A further problem with TRNGs for secure comms is that they are limited to delivering either high entropy (randomness) or high throughput (key generation frequency), but struggle to do both. In practice, as key requests ramp to serve ever-higher communication data rates, even the best TRNGs will reach a blocking rate at which the randomness is exhausted and keys can no longer be served. This either leads to downtime within the comms system, or the TRNG defaults to generating keys of 0, rendering the system highly insecure; either eventuality results in the system becoming highly susceptible to denial of service attacks.
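The tension between entropy and throughput can be illustrated with one classic post-processing step, the von Neumann extractor. The article does not say which post-processing real TRNGs use, so this choice, the simulated 80% bias and the function names are all assumptions for illustration. The extractor removes bias from independent raw bits by looking at them in pairs, but it does so by throwing most of them away.

```python
import random


def biased_bits(n: int, p_one: float, rng: random.Random):
    """Simulate a raw physical source that emits 1 with probability p_one."""
    return [1 if rng.random() < p_one else 0 for _ in range(n)]


def von_neumann_extract(bits):
    """Debias independent bits: pair 01 -> 0, pair 10 -> 1, pairs 00/11 dropped."""
    out = []
    for a, b in zip(bits[0::2], bits[1::2]):
        if a != b:
            out.append(a)
    return out


rng = random.Random(1)  # fixed seed so the simulation is repeatable
raw = biased_bits(20000, p_one=0.8, rng=rng)
clean = von_neumann_extract(raw)

print(f"raw bits:   {len(raw)}, fraction of ones {sum(raw) / len(raw):.3f}")
print(f"clean bits: {len(clean)}, fraction of ones {sum(clean) / len(clean):.3f}")
print(f"throughput retained: {len(clean) / len(raw):.1%}")
```

With a source biased 80/20, only about 16% of the raw bits survive, so the output is balanced but the usable key rate drops sharply. The more biased the physical source, the more throughput is sacrificed to recover entropy.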

Quantum Random Number Generators (QRNGs) are a new breed of RNGs that leverage quantum effects to generate random numbers. Not only does this achieve full entropy (i.e., truly random bit sequences) but, importantly, it can also deliver this level of entropy at a high throughput (random bits per second), making QRNGs ideal for high-bandwidth secure comms.

Having said that, not all QRNGs are created equal – in some designs, the level of randomness can be dependent on the physical construction of the device and/or the classical circuitry used for processing the output, either of which can result in the QRNG becoming deterministic and vulnerable to quantum attack in a similar fashion to the PRNG and TRNG. And just as with TRNGs, some QRNGs can run out of entropy at high data rates leading to system failure or generation of weak keys.

Careful design and robustness in implementation is therefore vital – Crypta Labs have been pioneering in quantum tech since 2014 and through their research have designed a QRNG that can deliver hundreds of megabits per second of full entropy whilst avoiding these implementation pitfalls.

Whilst time estimates vary, it’s considered inevitable that quantum computers will eventually reach sufficient maturity to beat today’s public-key algorithms – prosaically dubbed Y2Q. The Cloud Security Alliance (CSA) have started a countdown to April 14 2030 as the date by which they believe Y2Q will happen.

QKD was the industry’s initial reaction to counter this threat, but whilst meeting the security need at a theoretical level, it has arguably failed to address implementation concerns in a way which is cost-effective, scalable and secure for the mass market, at least to the satisfaction of NCSC and NSA.

Proponents of QKD believe key agreement and authentication mechanisms within public-key schemes can never be fully quantum-safe, and to a degree they have a point given the recent undermining of Rainbow, one of the short-listed PQC candidates. But QKD itself is only a partial solution.

The collaborative project led by NIST is therefore the most likely winner in this race, especially given its backing by both the NSA and NCSC. Post-quantum cryptography (PQC) appears to be inherently cheaper, easier to implement, and deployable on edge devices, and can be further strengthened through the use of advanced QRNGs. Deviating from the current public-key approach seems unnecessary compared to swapping out the current algorithms for the new PQC alternatives.

Setting aside the quantum threat to today’s encryption algorithms, an area ripe for innovation is in true quantum communications, or quantum teleportation, in which information is encoded and transferred via the quantum states of matter or light.

It’s still early days, but physicists at QuTech in the Netherlands have already demonstrated teleportation between three remote, optically connected nodes in a quantum network using solid-state spin qubits.

Longer term, the goal is to create a ‘quantum internet’ – a network of entangled quantum computers connected with ultra-secure quantum communication guaranteed by the fundamental laws of physics.

When will this become a reality? Well, as with all things quantum, the answer is typically ‘sometime in the next decade or so’… let’s see.
