The New Space era

The space industry has seen rapid change over the past decade with increased involvement from the private sector dramatically reducing costs through innovation, and democratising access for new commercial customers and market entrants – the New Space era.

Telecoms in particular has seen recent growth with the likes of Starlink, Kuiper, Globalstar, Iridium and others all vying to offer a mix of telecoms, messaging and internet services either to dedicated IoT devices and consumer equipment in the home or direct to mobile phones through 5G non-terrestrial networks (NTN).

Earth Observation (EO) is also going through a period of transformation. Whilst applications such as weather forecasting, precision farming and environmental monitoring have traditionally involved data being sent back to Earth for processing, the latest EO satellites and hyperspectral sensors gather far more data than can be quickly downlinked and hence there is a movement towards processing the data in orbit, and only relaying the most important and valuable insights back to Earth; for instance, using AI to spot ships and downlink their locations, sizes and headings rather than transferring enormous logs of ocean data.

Both applications are dependent on higher levels of compute to move signal processing up into the satellites, and rapid development and launch cycles to gain competitive advantage whilst delivering on a constrained budget shaped by commercial opportunity rather than government funding.

Space is harsh

The space environment though is harsh, with exposure to ionising radiation degrading satellite electronics over time, and high-energy particles from Galactic Cosmic Rays (GCR) and solar flares striking the satellite causing single event effects (SEE) that can ‘upset’ circuits and potentially lead to catastrophic failure.

The cumulative damage is known as the total ionising dose (TID) and has the effect of gradually shifting semiconductor gate biasing over time to a point where the transistors remain permanently open (or closed) leading to component failure.

In addition, the high-energy particles cause ‘soft errors’ such as a glitch in output or a bit flip in memory which corrupts data, potentially causing a crash that requires a system reboot; worst case, they can cause a destructive latch-up and burnout due to overcurrent.

Radiation-hardening

The preferred method for reducing these risks is to use components that have been radiation-hardened (‘rad-hard’).

Historically this involved using exotic process technologies such as silicon carbide or gallium nitride to provide high levels of immunity, but such an approach results in slower development time and significantly higher cost that can’t be justified beyond mission-critical and deep-space missions.

Radiation-Hardening By Design (RHBD) is now more common for developing space-grade components and involves a number of steps, such as changes in the substrate for chip fabrication to lower manufacturing costs, switching from ceramic to plastic packaging to reduce size and cost, and specific design & layout changes to improve tolerance to radiation. One such design change is the adoption of triple modular redundancy (TMR) in which mission critical data is stored and processed in three separate locations on the chip, with the output only being used if two or more responses agree.

These measures in combination can provide protection against radiation dose levels (TID) in the 100-300 krad range – not as high as for fully rad-hard mission-critical components (1000 krad+), but more than sufficient for enabling multi-year operation with manageable risk, and at lower cost.

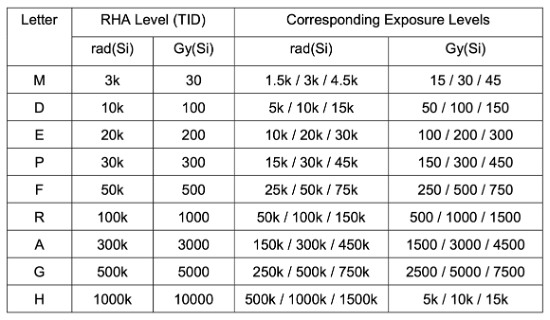

ESCC radiation hardness assurance (RHA) qualification levels

Having said that, they still cost much more than their commercial-off-the-shelf (COTS) equivalents due to larger die sizes, numerous design & test cycles, and smaller manufacturing volumes. RHBD components also tend to shy away from employing the latest semiconductor technology (smaller CMOS feature sizes, higher clock rates etc.) in order to maximise radiation tolerance, but in doing so sacrifice compute performance and power efficiency.

For today’s booming space industry, it will be challenging for space-grade components alone to keep pace with the required speed of innovation, high levels of compute, and constrained budgets driven by many New Space applications.

The challenge therefore is to design systems using COTS components that are sufficiently radiation-tolerant for the intended satellite lifespan, yet still able to meet the performance and cost goals. But this is far from easy, and the march to ever smaller process nodes (e.g., <7nm) to increase compute performance and lower power consumption are making COTS components increasingly vulnerable to the effects of radiation.

Radiation-tolerance

Over the past decade, the New Space industry has experimented with numerous approaches, but in essence the path most commonly taken to achieve the desired level of radiation tolerance for small satellites is through a combination of upscreening COTS components, dedicated shielding, and novel system design.

Upscreening

The radiation-tolerance of COTS components varies based on their internal design & process node, intended usage, and variations and imperfections in the chip-manufacturing process.

Typically withstanding radiation dose levels (TID) in the 5-10 krad range before malfunction, some components, such as those designed for automotive or medical applications and manufactured using larger process nodes (e.g., >28nm), may be tolerant up to 30-50 krad or even higher whilst also being better able at coping with the vibration, shock and temperature variations of space.

A process of upscreening can therefore be used to select components with better intrinsic radiation tolerance, and then filter out those with the highest tolerances within a batch.

Whilst this addresses the degradation over time due to ionising radiation, the components will still be vulnerable to single event effects (SEE) caused by high-energy particles, and even more so if they are based on smaller process nodes and pack billions of transistors into each chip.

Shielding

Shielding would seem the obvious answer, and can reduce the radiation dose levels whilst protecting to some extent against the particles that cause SEE soft errors.

Typically achieved using aluminium, shield performance can be improved by increasing its thickness and/or coating with a high-density metal such as tantalum. However, whilst aluminium shielding is generally effective, it’s less useful with some high-energy particles that can scatter on impact and shower the component with more radiation.

The industry has more recently been experimenting with novel materials, one example being the 3D-printed nanocomposite metal material pioneered by Cosmic Shielding Corporation that is capable of reducing the radiation dose rate by 60-70% whilst also being more effective at limiting SEE.

Concept image of a custom plasteel shielding solution for a space-based computer (CSC)

System design

Careful component selection and shielding can go a long way towards achieving radiation tolerance, but equally important is to design in a number of fault management mechanisms within the overall system to cope with the different types of soft error.

Redundancy

Similar to TMR, triple redundancy can be employed at the system level to spot and correct processing errors, and shut down a faulty board where needed. SpaceX follows such an approach, using triple-redundancy within its Merlin engine computers, with each constantly checking on the others as part of a fault-tolerant design.

System reset

More widely within the system, background analysis and watchdog timers can be used to spot when a part of the system has hung or is acting erroneously and reboot accordingly to (hopefully) clear the error.

Error correction

To mitigate the risk of data becoming corrupted in memory due to a single event upset (SEU), “scrubber” circuits utilising error-detection and correction codes (EDAC) can be used to continuously sweep the memory, checking for errors and writing back any corrections.

Latch-up protection

Latch-ups are a particular issue and can result in a component malfunctioning or even being permanently damaged due to overcurrent, but again can be mitigated to some extent through the inclusion of latch-up protection circuitry within the system to monitor and reset the power when a latch-up is detected.

This is a vibrant area for innovation within the New Space industry with a number of companies developing novel solutions including Zero Error Systems, Ramon Space, Resilient Computing and AAC Clyde Space amongst others.

Design trade-offs

Whilst the New Space industry has shown that it’s possible to leverage cheaper and higher performing COTS components when they’re employed in conjunction with various fault-mitigation measures, the effectiveness of this approach will ultimately be dependent on the satellite’s orbit, the associated levels of radiation it will be exposed to, and its target lifespan.

For the New Space applications mentioned earlier, a low earth orbit (LEO) is commonly needed to improve image resolution (EO), and optimise transmission latency and link budget (telecoms).

Orbiting at this altitude though results in a shorter satellite lifespan:

- More residual atmosphere and microscopic debris slow the satellite down and require it to burn more propellant to maintain orbit

- Debris also gradually degrades the performance of the solar panels

- More time is spent in the Earth’s shadow, thereby causing batteries to go through multiple discharge/recharge cycles per day which reduces their lifetime

Because of this degradation, LEO satellites may experience a useful lifespan limited to ~5-7yrs (Starlink 5yrs; Kuiper 7yrs), whilst smaller CubeSats typically used for research purposes may only last a matter of months.

Given these short periods in service, the cumulative radiation exposure for LEO satellites will be relatively low (<30 krad), making it possible to design them with upscreened COTS components combined with fault management and some degree of shielding without too much risk of failure (and in the case of telecoms, the satellite constellations are complemented with spares should any fail).

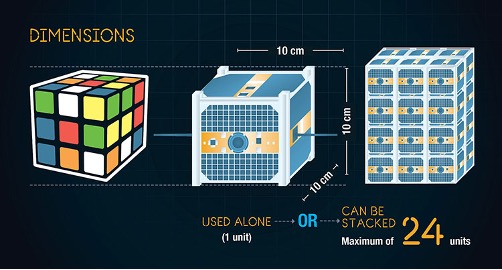

With CubeSats, where lifetime and hence radiation exposure is lower still (<10 krad), and the emphasis is often on minimising size and weight to reduce costs in manufacture and launch, dedicated shielding may not be used at all, with reliance being placed instead on radiation-tolerant design at the system level… although by taking such risks, CubeSats tend to be prone to more SEE-related errors and repeated reboots (possibly as much as every 3-6 weeks).

Spotlight on telecoms

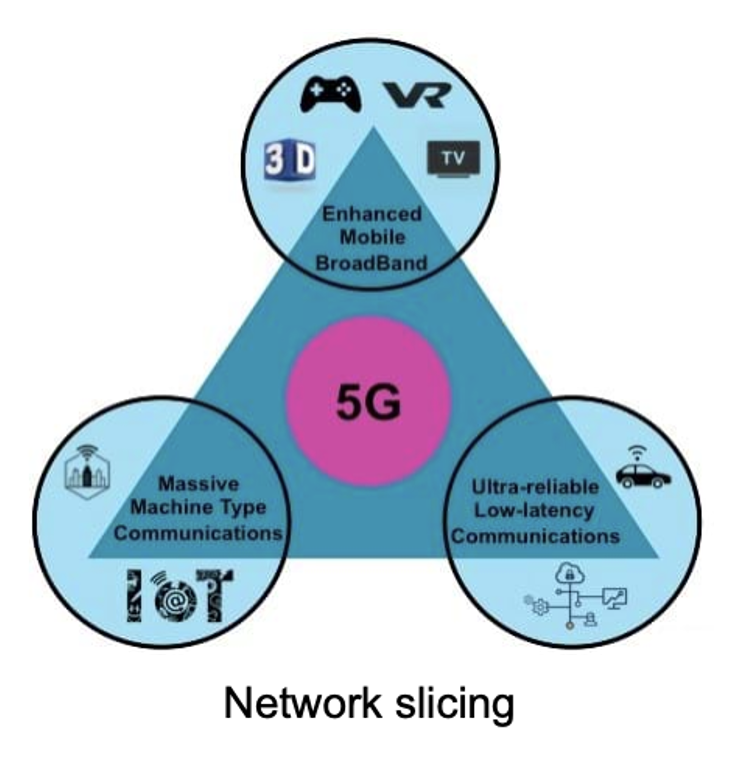

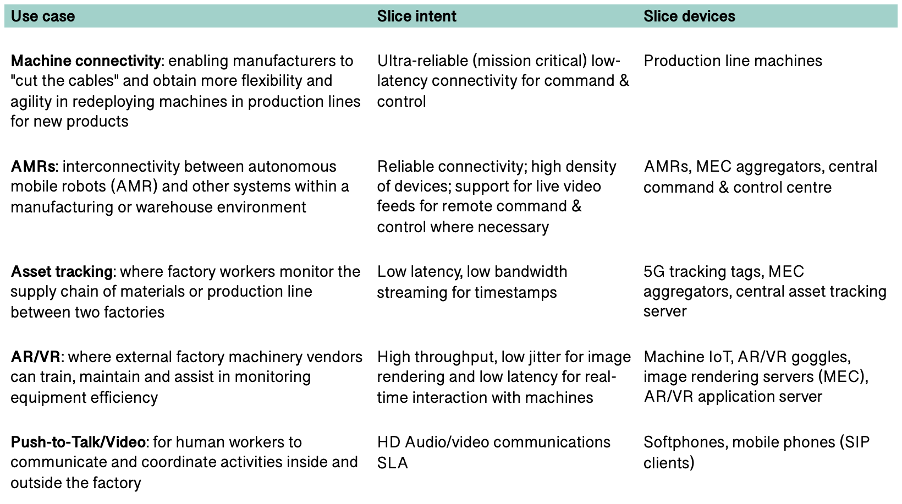

In the telecoms industry, there is growing interest in deploying services that support direct-to-device (D2D) 5G connectivity between satellites and mobile phones to provide rural coverage and emergency support, similar to Apple’s Emergency SOS service delivered via Globalstar‘s satellite constellation.

To-date, constellations such as Globalstar’s have relied on simple bent-pipe (‘transparent’) transponders to relay signals between basestations on the ground and mobile devices. Going forward, there is growing interest in moving the basestations up into the satellites themselves to deliver higher bandwidth connectivity (a ‘regenerative’ approach). Lockheed Martin, in conjunction with Accelercomm, Radisys and Keysight, have already demonstrated technical feasibility of a 5G system and plan a live trial in orbit later in 2024.

Source: Lockheed Martin

The next few years will see further deployments of 5G non-terrestrial networks (NTN) for IoT and consumer applications as suitable space-grade and space-tolerant processors for running gNodeB basestation software and the associated inter-satellite link (ISL) processing become more widely available, and the 3GPP R19 standards for the NTN regenerative payload approach are finalised.

Takeaways

New Space is driving a need for high levels of compute performance and rapid development using the latest technology, but is limited by strict budgetary constraints to achieve commercial viability. The space environment though is harsh, and delivering on these goals is challenging.

Radiation-hardening of electronic components is the gold standard, but expensive due to the lengthier design & testing cycles, and higher marginal cost of manufacturing due to the smaller volumes, and such an approach often needs to sacrifice compute performance to achieve higher levels of radiation immunity and longer lifespan.

COTS components can unlock higher levels of compute performance and rapid development, but are typically too vulnerable to radiation exposure to be used in space applications on their own for any appreciable length of time without risk of failure.

Radiation-tolerance can be improved through a process of upscreening components, dedicated shielding, and fault management measures within the system design. And this approach is typically sufficient for those New Space applications targeting LEO orbits which, due to the limited satellite lifespans at this altitude, and with some protection from the Earth’s atmosphere, experience doses of radiation low enough during their lifetime to be adequately mitigated by the rad-tolerant approach.

Satellites are set to become more intelligent, whether that be through running advanced communications software, or deriving insights from imaging data, or the use of AI for other New Space applications. This thirst for compute performance is being met by a mix of rad-tolerant (~30-50 krad) and rad-hard (>100 krad) processors and single-board computers (SBC) coming to market from startups (Aethero), more established companies (Vorago Technologies; Coherent Logix), and industry stalwarts such as BAE, as well as NASA’s own HPSC (in partnership with Microchip) offering both rad-tolerant (for LEO) and rad-hard RISC-V options launching in 2025.

The small satellite market is set to expand significantly over the coming decade with the growing democratisation of space, and the launch, and subsequent refresh, of the telecoms constellations – Starlink alone will likely refresh 2400 satellites a year (assuming 12k constellation; 5yr satellite lifespan), and could need even more if permitted to expand their constellation with another 30,000 satellites over the coming years.

As interest from the private sector in the commercialisation of space grows, it’s opening up a number of opportunities for startups to innovate and bring solutions to market that boost performance, increase radiation tolerance, and reduce cost.

Some such as EdgX are seeking to revolutionise compute and power efficiency through the use of neuromorphic computing, whilst others (Zero Error Systems; Apogee Semiconductor) are focused on developing software and IP libraries that can be integrated into future designs for radiation-tolerant components and systems, and others (Little Place Labs) are utilising onboard AI to transform satellite data near-realtime into actionable insights for rapid relay back to Earth.

Space has had many false dawns in the past, but the future for New Space, and the opportunities it presents for startups to innovate and disrupt the norms, are numerous.

An overview of GNSS

Global Navigation Satellite Systems (GNSS) such as GPS, Galileo, GLONASS and BeiDou are constellations of satellites that transmit positioning and timing data. This data is used across an ever-widening set of consumer and commercial applications ranging from precision mapping, navigation and logistics (aerial, ground and maritime) to enable autonomous unmanned flight and driving. Such data is also fundamental to critical infrastructure through the provision of precise time (UTC) for synchronisation of telecoms, power grids, the internet and financial networks.

Whilst simple SatNav applications can be met with relatively cheap single-band GNSS receivers and patch antennas in smartphones, their positioning accuracy is limited to ~5m. Much more commercial value can be unlocked by increasing the accuracy to cm-level, thereby enabling new applications such as high precision agriculture and by improving resilience in the face of multi-path interference or poor satellite visibility to increase availability. Advanced Driver Assistance Systems (ADAS), in particular, are dependent on high precision to assist in actions such as changing lanes. And in the case of Unmanned Aerial Vehicles (UAVs) aka drones, especially those operating beyond visual line of sight (BVLoS), GNSS accuracy and resilience are equally mission critical.

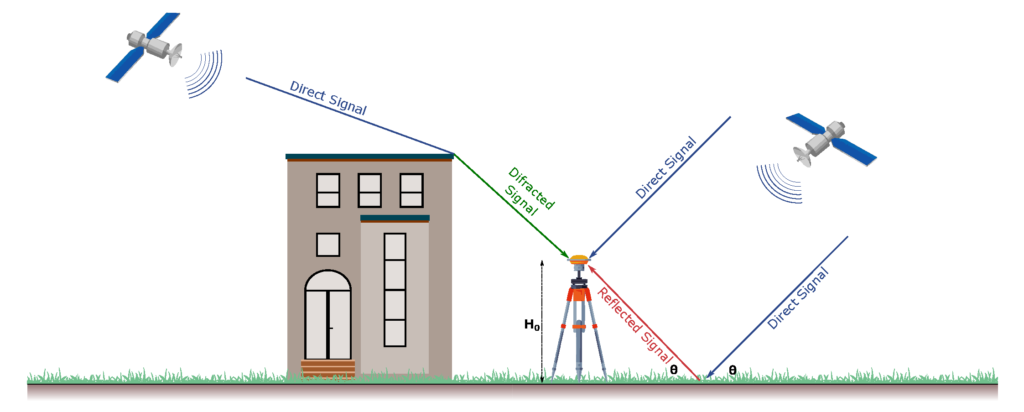

GNSS receivers need unobstructed line-of-sight to at least four satellites to generate a location fix, and even more for cm-level positioning. Buildings, bridges and trees can either block signals or cause multi-path interference thereby forcing the receiver to fallback to less-precise GNSS modes and may even lead to complete loss of signal tracking and positioning. Having access to more signals by utilising multiple GNSS bands and/or more than one GNSS constellation can make a big difference in reducing position acquisition time and improving accuracy.

Source: https://www.mdpi.com/1424-8220/22/9/3384

Interference and GNSS spoofing

Interference in the form of jamming and spoofing can be generated intentionally by bad actors, and is a growing threat. In the case of UAVs, malicious interference to jam the GNSS receiver can force the UAV to land; whilst GNSS spoofing, in which a fake signal is generated to offset the measured position, directs the UAV off its planned course to another location; the intent being to hijack the payload.

The sophistication of such attacks has historically been limited to military scenarios and been mitigated by expensive protection systems. But with the growing availability of cheap and powerful software defined radio (SDR) equipment, civil applications and critical infrastructure reliant on precise timing are equally becoming vulnerable to such attacks. As such, being able to determine the direction of incoming signals and reject those not originating from the GNSS satellites is an emerging challenge.

Importance of GNSS antennas and design considerations

GNSS signals are extremely weak, and can be as low as 1/1000th of the thermal noise. Therefore, the performance of the antenna being first in the signal processing chain, is of paramount importance to achieving high signal fidelity. This is even more important where the aim is to employ phase measurements of the carrier signal to further increase GNSS accuracy.

However, more often than not the importance of the antenna is overlooked or compromised by cost pressures, often resulting in the use of simple patch antennas. This is a mistake, as doing so dramatically increases the demands on the GNSS receiver, resulting in higher power consumption and lower performance.

In comparison to patch antennas, a balanced helical design with its circular polarisation is capable of delivering much higher signal quality and with superior rejection of multi-path interference and common mode noise. These factors, combined with the design’s independence of ground plane effects, results in a superior antenna plus an ability to employ carrier phase measurements resulting in positional accuracy down to 10cm or better. This is simply not possible with patch antennas in typical scenarios such as on a metal vehicle roof or a drone’s wing.

The SWAP-C challenge

Size is also becoming a key issue – as GNSS becomes increasingly employed across a variety of consumer and commercial applications, there is growing demand to miniaturise GNSS receivers. Consequently, Size, Weight and Power (SWAP-C) are becoming the key factors for the system designer, as well as cost.

UAV GNSS receivers, for example, often need to employ multiple antennas to accurately determine position and heading, and support multi-band/multi-constellation to improve resiliency. But in parallel they face size and weight constraints, plus the antennas must be deployed in close proximity without suffering from near-field coupling effects or in-band interference from nearby high-speed digital electronics on the UAV. As drones get smaller (e.g. quadcopter delivery or survey drones) the SWAP-C challenge only increases further.

Aesthetics also comes into play – very similar to the mobile phone evolution from whip-lash antenna to highly integrated smartphone, the industrial designer is increasingly influencing the end design of GNSS equipment. This influence places further challenges on the RF design and particularly where design aesthetics require the antenna to be placed inside an enclosure.

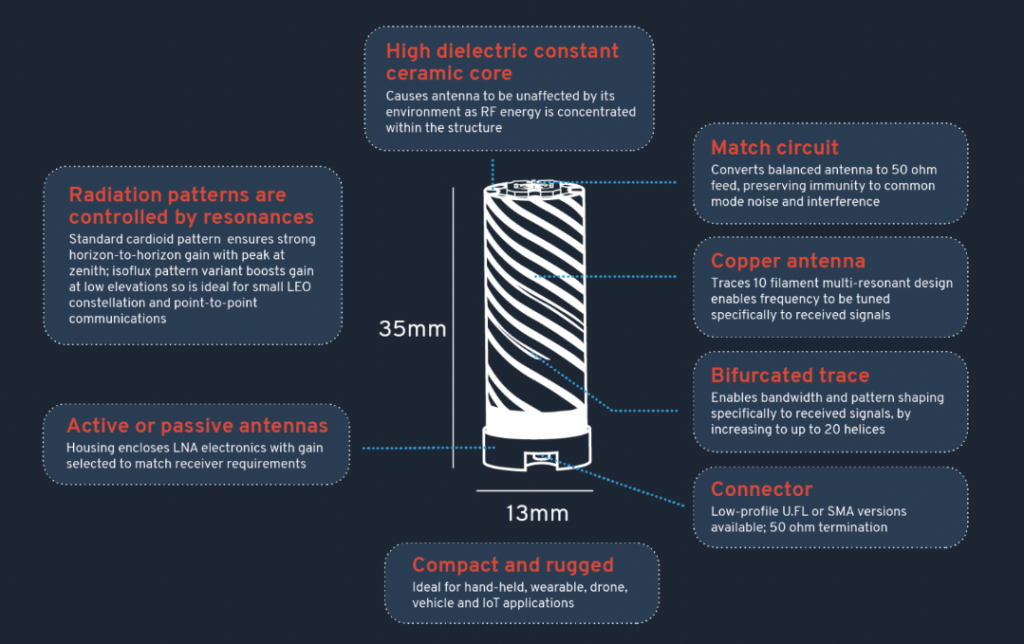

The manufacturing challenge

Optimal GNSS performance is achieved through the use of ceramic cores within the helical antenna. However doing so raises a number of manufacturing yield challenges. As a result of material and dimensional tolerances, the cores have a “relative dielectric mass” and so the resonance frequency can vary between units. This can lead to excessive binning in order to deliver antennas that meet the final desired RF performance. Production yield could be improved with high precision machining and tuning, but such an approach results in significantly higher production costs.

Many helical antenna manufacturers have therefore limited their designs to using air-cores to simplify production, whereby each antenna is 2D printed and folded into the 3D form of a helical antenna. This is then manually soldered and tuned, an approach which could be considered ‘artisanal’ by modern manufacturing standards. This also results in an antenna that is larger, less mechanically robust, and more expensive, and generally less performant in diverse usage scenarios.

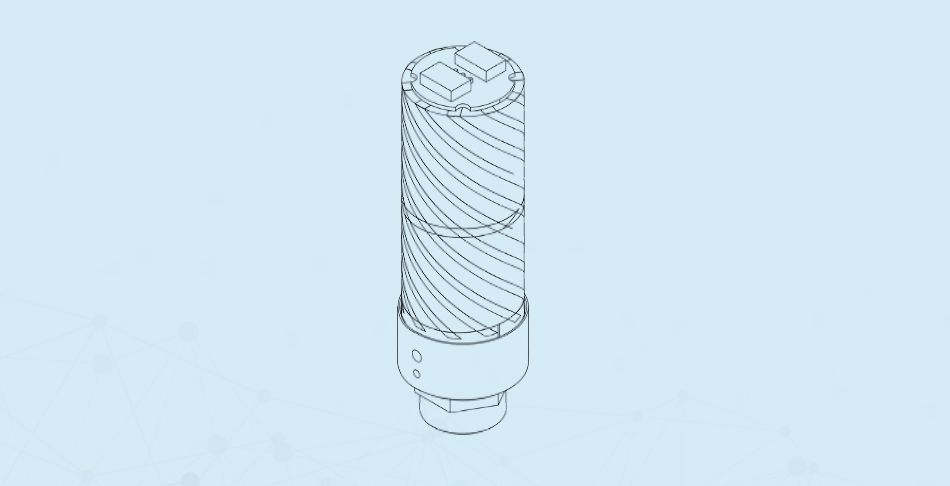

Introducing Helix Geospace

Helix Geospace excels in meeting these market needs with its 3D printed helical antennas using novel ceramics to deliver a much smaller form factor that is also electrically small thereby mitigating coupling issues. The ceramic dielectric loaded design delivers a much tighter phase centre for precise position measurements and provides a consistent radiation pattern to ensure signal fidelity and stability regardless of the satellites’ position relative to the antenna and its surroundings.

In Helix’s case, they overcome the ‘artisanal’ challenge of manufacturing through the development of an AI-driven 3D manufacturing process which automatically assesses the material variances of each ceramic core. The adaptive technology compensates by altering the helical pattern that is printed onto it, thereby enabling the production of high-performing helical antennas at volume.

This proprietary technique makes it possible to produce complex multi-band GNSS antennas on a single common dielectric core – multi-band antennas are increasingly in demand as designers seek to produce universal GNSS receiver systems for global markets. The ability to produce multi-band antennas is transformative, enabling a marginal cost of production that meets the high performance requirements of the market, but at a price point that opens up the use of helical antennas to a much wider range of price sensitive consumer and commercial applications.

In summary

As end-users and industry seek higher degrees of automation, there is growing pressure on GNSS receiver systems to evolve towards cm-level accuracy whilst maintaining high levels of resiliency, and in form factors small and light enough for widespread adoption.

By innovating in its manufacturing process, Helix is able to meet this demand with GNSS antennas that deliver a high level of performance and resilience at a price point that unlocks the widest range of consumer and commercial opportunities be that small & high precision antennas for portable applications, making cm-level accuracy a possibility within ADAS solutions, or miniaturised anti-jamming CRPA arrays suitable for mission critical UAVs as small as quadcopters (or smaller!).

Random numbers are used everywhere, from facilitating lotteries, to simulating the weather or behaviour of materials, and ensuring secure data exchange between infrastructure and vehicles in intelligent transport systems (C-ITS).

Perhaps most importantly though, randomness is at the core of the security we all rely upon for transacting safely on the internet. Specifically, random numbers are used in the creation of secure cryptographic keys for encrypting data to safeguard its confidentiality, integrity and authenticity.

Random numbers are provided via random number generators (RNGs) that utilise a source of entropy (randomness) and an algorithm to generate random numbers. A number of RNG types exist based on the method of implementation (e.g. hardware and/or software).

Pseudo-random number generators (PRNGs)

PRNGs that rely purely on software can be cost-effective, but are intrinsically deterministic and given the same seed will produce the exact same sequence of random numbers (and due to memory constraints, this sequence will eventually repeat) – whilst the output of the PRNG may be statistically random, its behaviour is entirely predictable.

An attacker able to guess which PRNG is being used can deduce its state by observing the output sequence and thereby predict each random number – and this can be as small as 624 observations in the case of common PRNGs such as the Mersenne Twister MT19937. Moreover, an unfortunate choice of seed can lead to a short cycle length before the number sequence repeats which again opens the PRNG to attack. In short, PRNGs are inherently vulnerable and far from ideal for cryptography.

True random number generators (TRNGs)

TRNGs extract randomness (entropy) from a physical source and use this to generate a sequence of random numbers that in theory are highly unpredictable. Certainly they address many of the shortcomings of PRNGs, but they’re not perfect either.

In many TRNGs, the entropy source is based on thermal or electrical noise, or jitter in an oscillator, any of which can be manipulated by an attacker able to control the environment in which the TRNG is operating (e.g., temperature, EMF noise or voltage modulation).

Given the need to sample the entropy source, a TRNG can be slow in operation, and fundamentally limited by the nature of the entropy pool – a poorly designed implementation or choice of entropy can result in the entropy pool quickly becoming exhausted. In such a scenario, the TRNG has the choice of either reducing the amount of entropy used for generating each random number (compromising security) or scaling back the number of random numbers it generates.

Either situation could result in the same or similar random numbers being output until the entropy pool is replenished – a serious vulnerability that can be addressed through in-built health checks, but with the risk that output is ceased hence opening the TRNG up to denial-of-service attacks. Ring-oscillator based TRNGs, for instance, have a hard limit in how fast they can be run, and if more entropy is sought by combining a few in parallel they can produce similar outputs hence undermining their usefulness.

IoT devices in particular often have difficulty gathering sufficient entropy during initialisation to generate strong cryptographic keys given the lack of entropy sources in these simple devices, hence can be forced to use hard-coded keys, or seed the RNG from unique (but easy to guess) identifiers such as the device’s MAC address, both of which seriously undermines security robustness.

Ideally, RNGs need an entropy source that is completely unpredictable and chaotic, not influenced by external environmental factors, able to provide random bits in abundance to service a large volume of requests and facilitate stronger keys, and service these requests quickly and at high volume.

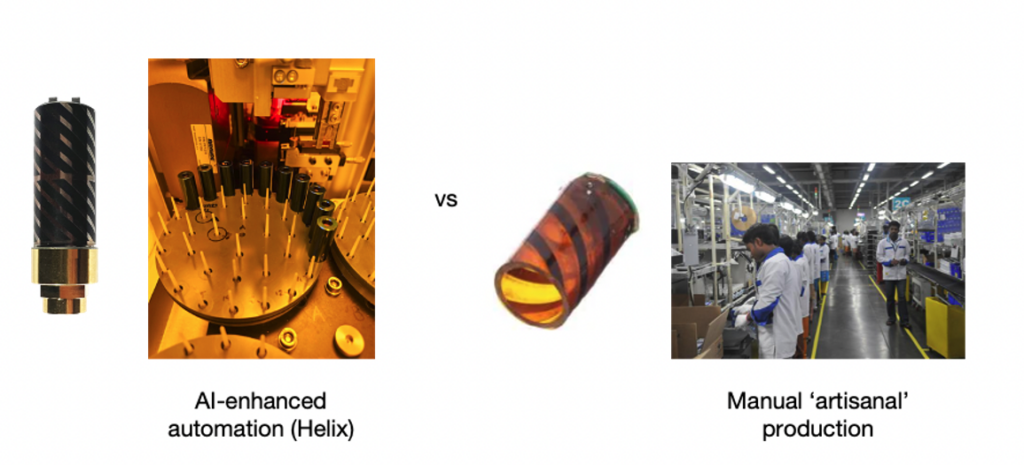

Quantum random number generators (QRNGs)

QRNGs are a special class of RNG that utilise Heisenberg’s Uncertainty Principle (an inability to know the position and speed of a photon or electron with perfect accuracy) to provide a pure source of entropy and therefore address all the aforementioned requirements.

Not only do they provide a provably random entropy source based on the laws of physics, QRNGs are also intrinsically high entropy hence able to deliver truly random bit sequences and at high speed thereby enabling QRNGs to run much faster than other TRNGs, and more efficiently than PRNGs across high volume applications.

They are also more resistant to environmental factors, and thereby at less risk from external manipulation, whilst also being able to operate reliably in EMF noisy environments such as data centres for serving random numbers to thousands of servers realtime.

But not all QRNGs are created equal; poor design in the physical construction and/or processing circuitry can compromise randomness or reduce the level of entropy resulting in system failure at high volumes. QRNG designs embracing sophisticated silicon photonics in an attempt to create high entropy sources can become cost prohibitive in comparison to established RNGs, whilst other designs often have size and heat constraints.

Introducing Crypta Labs

Careful design and robustness in implementation is therefore vital – Crypta Labs have been pioneering in quantum technology since 2014 and through their research have developed a unique solution utilising readily available components that makes use of quantum photonics as a source of entropy to produce a state-of-the-art QRNG capable of delivering quantum random numbers at very high speeds and easily integrated into existing systems. Blueshift Memory is an early adopter of the technology, creating a cybersecurity memory solution that will be capable of countering threats from quantum computing.

Rapid advances in compute power are undermining traditional cryptographic approaches and exploiting any weakness; even a slight imperfection in the random number generation can be catastrophic. Migrating to QRNGs reduces this threat and provides resiliency against advances in classical compute and the introduction of basic quantum computers expected over the next few years. In time, quantum computers will advance sufficiently to break the encryption algorithms themselves, but such computers will require tens of millions of physical qubits and therefore are unlikely to materialise for another 10 years or more.

Post-quantum cryptography (PQC) algorithms

In preparation for this quantum future, an activity spearheaded by NIST in the US with input from academia and the private sector (e.g., IBM, ARM, NXP, Infineon) is developing a set of PQC algorithms that will be safe against this threat. A component part of ensuring these PQC algorithms are quantum-safe involves moving to much larger key sizes, hence a dependency on QRNGs able to deliver sufficiently high entropy at scale.

In summary, a transition by hardware manufacturers (servers, firewalls, routers etc.) to incorporate QRNGs at the board level addresses the shortcomings of existing RNGs whilst also providing quantum resilience for the coming decade. Not only are they being adopted by large corporates such as Alibaba, they also form a component part of the White House’s strategy to combat the quantum threat in the US.

Given that QRNGs are superior to other TRNGs, can contribute to future-proofing cryptography for the next decade, and are now cost effective and easy to implement in the case of Crypta Lab’s solution, they really are a no-brainer.

A key tenet of the Bloc Ventures investment strategy is to back companies that have science-based IP, with the potential to impact a broad set of industries and so are less affected by hype cycles or momentum.

A proof point of this strategy is AccelerComm, which has today announced a $27m Series B funding round with new investment from Parkwalk, SwissComm and Hostplus, as well as continued support from Bloc Ventures, IP Group and IQ Capital. The confidence shown by the investor community is testament to the technology, the team and the resilient nature of deep tech.

What does AccelerComm do?

Spun-out from the University of Southampton, the company was founded by Professor Rob Maunder and is driven by a leadership team with executive experience gained at ARM, Qualcomm and Ericsson. AccelerComm has established itself as a leader in the optimisation of communication networks, and is already working with Intel, AMD and National Instruments to name a few. When applied to 5G, the company’s world-leading channel coding technology enables the most efficient use of radio spectrum, improving coverage and increasing capacity, whilst reducing latency and power consumption.

What is key to AccelerComm’s success is that they have achieved a strong product-market fit for the technology. Far too often there’s a great idea but no customer. Another fundamental part of the journey (something that we feel is key to spinout success) has been the commitment of the academic founder Rob Maunder, who joined the company on a full-time basis early on its journey.

By applying its technological advancement to a technology economic problem (increasing consumption vs the cost of the network), the company has been able to acquire new customers, demonstrate a growing market and consequently attract growth investors. All this despite the wider less favourable market sentiment towards softer ‘tech’ startups operating in the consumer facing venture capital markets.

Why is deep tech not affected by wider market challenges?

Much has been written about the change in attitude and risk appetite around venture capital investing in recent times, with Michael Casey (Portico Advisors) writing in the FT: “Venture capital prospered in a magical decade that placed a premium value on storytelling”. But in fact, deep tech has been much less affected by this wider sentiment and change in attitude, largely because outlandish storytelling and rapid due diligence, are rarely part of the deep tech investment process.

Of course, deep tech companies come with technical risk, but once this is understood and overcome there only remains market demand risk, and once this is proven, companies often have much more defensibility than more generalist consumer technology companies who are frequently more harshly affected by current market sentiment.

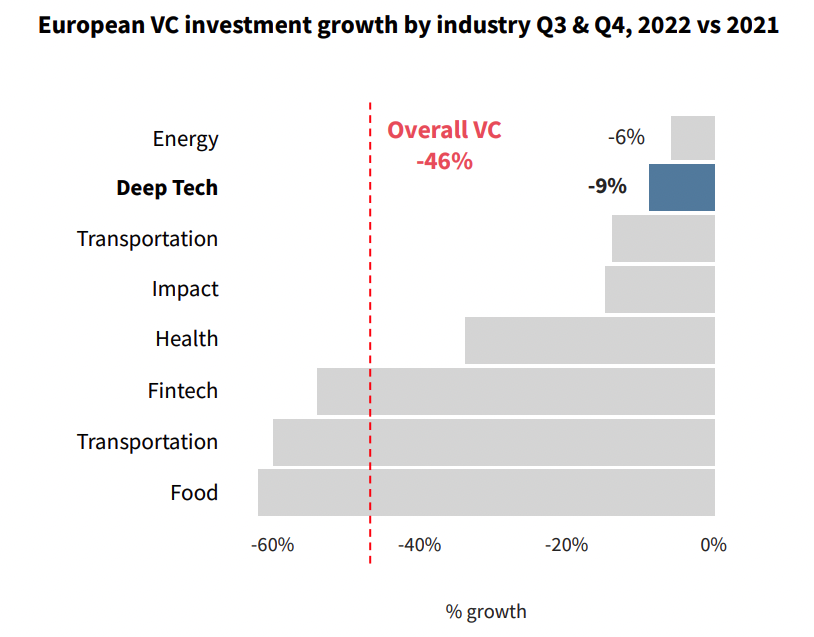

As reported recently by Dealroom, European deep tech funding only dropped 9% from 2021 to 2022, compared with FinTech (45%), Health Tech (35%) and Food Tech (60%) which saw a much larger drop in funding, caused by the wider acknowledgment of inflated valuations in overall VC (which dropped 46% in the same period).

So why doesn’t everyone invest in deep tech?

The ecosystem of deep tech co-investors is relatively small, often with only a handful of options available to entrepreneurs building solutions in complex and specialised markets. There are many reasons for this: the product-market fit journey for deep tech companies can be long and expensive; the technical due diligence involved requires a scientific understanding of the technology; and the network required for an investor to support deep tech companies effectively takes decades to build which is a real barrier for most generalist investors.

These are key reasons behind the way Bloc is structured. We’re a permanent capital company, made up of a complement of deeply technical and operationally experienced industry experts, with a strong network in the technology supply chain. This means we can confidently validate products, companies and entrepreneurs, whilst being able to back them for the long term (with no fund restrictions) and providing hands-on support as portfolio companies commercialise.

The UK ecosystem of deep tech investors is small but growing, with access to some of the best technical minds in the world and the potential to build globally competitive companies like AccelerComm.

So what makes deep tech attractive?

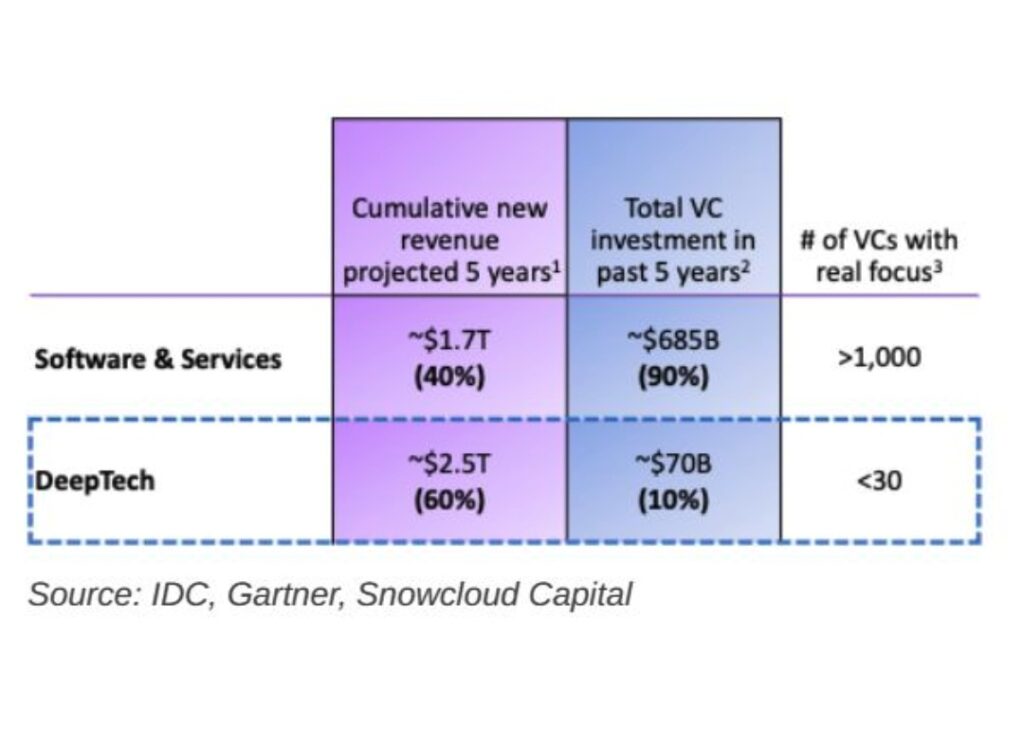

As shown in the table above, as an asset class, despite receiving only 10% of the world’s VC funding, deep tech companies (classified as hardware in the research) are well on a path to punch above their weight in proportion of revenue compared to generalist technology (classified as software in the research). A trend that’s accelerating with the advent of the hard deep tech that AI and Quantum are so reliant on.

The challenge for deep tech startups themselves remains an investor gap with less than 3% of VCs considered to have a real focus on deep tech and the operational expertise and networks necessary to scale them.

At Bloc, we’re always looking for new partners looking to invest in the world’s technology infrastructure, if that’s you please get in touch with our team.

‘Big Tech companies use cloud computing arms to pursue alliances with AI groups.’

YellowDog’s CEO was featured in the FT this week as he spoke to a journalist on the rise of generative AI and natural language models, with special focus on the impact on compute requirements and partnerships with compute providers.

The YellowDog platform enables AI companies to reduce cost by up to 90% and remain flexible by adopting a multi-cloud strategy, accessing compute resource from all major providers.

Here is the excerpt: Cloud management company YellowDog, which helps customers switch between cloud services, says it knows of several alliances between nascent AI companies that have yet to launch products and cloud providers, made at a stage when they are willing to tie themselves to a supplier and give up equity. “Some academics that want to move into their own start-up, their first conversation is with cloud providers before they even recruit developers because they know it’s impossibly expensive. It’s key,” said YellowDog’s CEO.

The full article can be found here: https://www.ft.com/content/5b17d011-8e0b-4ba1-bdca-4fbfdba10363

An introduction from our CTO

Whilst security is undoubtedly important, fundamentally it’s a business case based on the time-value depreciation of the asset being protected, which in general leads to a design principle of “it’s good enough” and/or “it will only be broken in a given timeframe”.

At the other extreme, history has given us many examples where reliance on theoretical certainty fails due to unknowns. One such example being the Titanic which was considered by its naval architects as unsinkable. The unknown being the iceberg!

It is a simple fact that weaker randomness leads to weaker encryption, and with the inexorable rise of compute power due to Moore’s law, the barriers to breaking encryption are eroding. And now with the advent of the quantum-era, cyber-crime is about to enter an age in which encryption when done less than perfectly (i.e. lacking true randomness) will no longer be ‘good enough’ and become ever more vulnerable to attack.

In the following, Bloc’s Head of Research David Pollington takes a deeper dive into the landscape of secure communications and how it will need to evolve to combat the threat of the quantum-era. Bloc’s research findings inform decisions on investment opportunities.

Setting the scene

Much has been written on quantum computing’s threat to encryption algorithms used to secure the Internet, and the robustness of public-key cryptography schemes such as RSA and ECDH that are used extensively in internet security protocols such as TLS.

These schemes perform two essential functions: securely exchanging keys for encrypting internet session data, and authenticating the communicating partners to protect the session against Man-in-the-Middle (MITM) attacks.

The security of these approaches relies on either the difficulty of factoring integers (RSA) or calculating discrete logarithms (ECDH). Whilst safe against the ‘classical’ computing capabilities available today, both will succumb to Shor’s algorithm on a sufficiently large quantum computer. In fact, a team of Chinese scientists have already demonstrated an ability to factor integers of 48bits with just 10 qubits using Schnorr’s algorithm in combination with a quantum approximate optimization to speed-up factorisation – projecting forwards, they’ve estimated that 372 qubits may be sufficient to crack today’s RSA-2048 encryption, well within reach over the next few years.

The race is on therefore to find a replacement to the incumbent RSA and ECDH algorithms… and there are two schools of thought: 1) Symmetric encryption + Quantum Key Distribution (QKD), or 2) Post Quantum Cryptography (PQC).

Quantum Key Distribution (QKD)

In contrast to the threat to current public-key algorithms, most symmetric cryptographic algorithms (e.g., AES) and hash functions (e.g., SHA-2) are considered to be secure against attacks by quantum computers.

Whilst Grover’s algorithm running on a quantum computer can speed up attacks against symmetric ciphers (reducing the security robustness by a half), an AES block-cipher using 256-bit keys is currently considered by the UK’s security agency NCSC to be safe from quantum attack, provided that a secure mechanism is in place for sharing the keys between the communicating parties – Quantum Key Distribution (QKD) is one such mechanism.

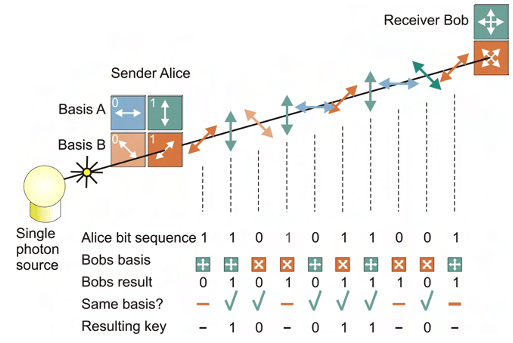

Rather than relying on the security of underlying mathematical problems, QKD is based on the properties of quantum mechanics to mitigate tampering of the keys in transit. QKD uses a stream of single photons to send each quantum state and communicate each bit of the key.

However, there are a number of implementation considerations that affect its suitability:

Integration complexity & cost

- QKD transmits keys using photons hence is reliant on a suitable optical fibre or free-space (satellite) optical link – this adds complexity and cost, precludes use in resource-constrained edge devices (such as mobile phones and IoT devices), and reduces flexibility in applying upgrades or security patches

Distance constraints

- A single QKD link over optical fibre is typically limited to a few 100 km’s with a sweet spot in the 20–50 km range

- Range can be extended using quantum repeaters, but doing so entails additional cost, security risks, and threat of interception as the data is decoded to classical bits before re-encrypting and transmitting via another quantum channel; it also doesn’t scale well for constructing multi-user group networks

- Alternative, greater range can be achieved via satellite links, but at significant additional cost

- A fully connected entanglement-based quantum communication network is theoretically possible without requiring trusted nodes, but is someway off commercialisation and will be dependent again on specialist hardware

Authentication

- A key tenet of public-key schemes is mutual authentication of the communicating parties – QKD doesn’t inherently include this, and hence is reliant on either encapsulating the symmetric key using RSA or ECDH (which, as already discussed, isn’t quantum-safe), or using pre-shared keys exchanged offline (which adds complexity)

- Given that the resulting authenticated channel could then be used in combination with AES for encrypting the session data, to some extent this negates the need for QKD

DoS attack

- The sensitivity of QKD channels to detecting eavesdropping makes them more susceptible to denial of service (DoS) attacks

Post-Quantum Cryptography (PQC)

Rather than replacing existing public key infrastructure, an alternative is to develop more resilient cryptographic algorithms.

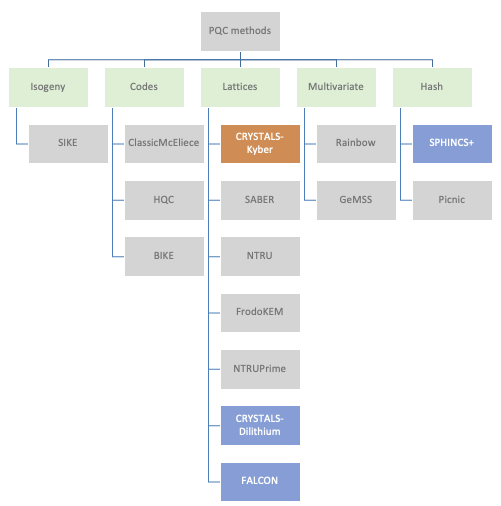

With that in mind, NIST have been running a collaborative activity with input from academia and the private sector (e.g., IBM, ARM, NXP, Infineon) to develop and standardise new algorithms deemed to be quantum-safe.

A number of mathematical approaches have been explored with a large variation in performance. Structured lattice-based cryptography algorithms have emerged as prime candidates for standardisation due to a good balance between security, key sizes, and computational efficiency. Importantly, it has been shown that lattice-based algorithms can be implemented on low-power IoT edge devices (e.g., using Cortex M4) whilst maintaining viable battery runtimes.

Four algorithms have been short-listed by NIST: CRYSTALS-Kyber for key establishment, CRYSTALS-Dilithium for digital signatures, and then two additional digital signature algorithms as fallback (FALCON, and SPHINCS+). SPHINCS+ is a hash-based backup in case serious vulnerabilities are found in the lattice-based approach.

NIST aims to have the PQC algorithms fully standardised by 2024, but have released technical details in the meantime so that security vendors can start working towards developing end-end solutions as well as stress-testing the candidates for any vulnerabilities. A number of companies (e.g., ResQuant, PQShield and those mentioned earlier) have already started developing hardware implementations of the two primary algorithms.

Commercial landscape

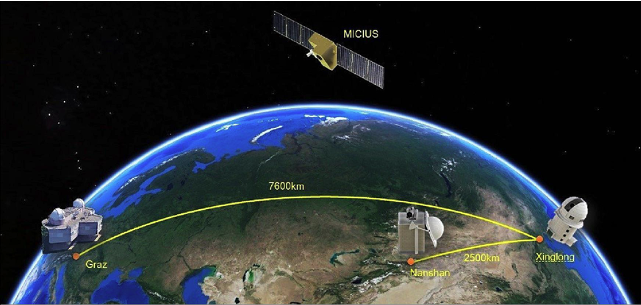

QKD has made slow progress in achieving commercial adoption, partly because of the various implementation concerns outlined above. China has been the most active, the QUESS project in 2016 creating an international QKD satellite channel between China and Vienna, and in 2017 the completion of a 2000km fibre link between Beijing and Shanghai. The original goal of commercialising a European/Asian quantum-encrypted network by 2020 hasn’t materialised, although the European Space Agency is now aiming to launch a quantum satellite in 2024 that will spend three years in orbit testing secure communications technologies.

BT has recently teamed up with EY (and BT’s long term QKD tech partner Toshiba) on a two year trial interconnecting two of EY’s offices in London, and Toshiba themselves have been pushing QKD in the US through a trial with JP Morgan.

Other vendors in this space include ID Quantique (tech provider for many early QKD pilots), UK-based KETS, MagiQ, Qubitekk, Quintessance Labs and QuantumCtek (commercialising QKD in China). An outlier is Arqit; a QKD supporter and strong advocate for symmetric encryption that addresses many of the QKD implementation concerns through its own quantum-safe network and has partnered with Virgin Orbit to launch five QKD satellites, beginning in 2023.

Given the issues identified with QKD, both the UK (NCSC) and US (NSA) security agencies have so far discounted QKD for use in government and defence applications, and instead are recommending post-quantum cryptography (PQC) as the more cost effective and easily maintained solution.

There may still be use cases (e.g., in defence, financial services etc.) where the parties are in fixed locations, secrecy needs to be guaranteed, and costs are not the primary concern. But for the mass market where public-key solutions are already in widespread use, the best approach is likely to be adoption of post-quantum algorithms within the existing public-key frameworks once the algorithms become standardised and commercially available.

Introducing the new cryptographic algorithms though will have far reaching consequences with updates needed to protocols, schemes, and infrastructure; and according to a recent World Economic Forum report, more than 20 billion digital devices will need to be upgraded or replaced.

Widespread adoption of the new quantum-safe algorithms may take 10-15 years, but with the US, UK, French and German security agencies driving the use of post-quantum cryptography, it’s likely to become defacto for high security use cases in government and defence much sooner.

Organisations responsible for critical infrastructure are also likely to move more quickly – in the telco space, the GSMA, in collaboration with IBM and Vodafone, have recently launched the GSMA Post-Quantum Telco Network Taskforce. And Cloudflare has also stepped up, launching post-quantum cryptography support for all websites and APIs served through its Content Delivery Network (19+% of all websites worldwide according to W3Techs).

Importance of randomness

Irrespective of which encryption approach is adopted, their efficacy is ultimately dependent on the strength of the cryptographic key used to encrypt the data. Any weaknesses in the random number generators used to generate the keys can have catastrophic results, as was evidenced by the ROCA vulnerability in an RSA key generation library provided by Infineon back in 2017 that resulted in 750,000 Estonian national ID cards being compromised.

Encryption systems often rely upon Pseudo Random Number Generators (PRNG) that generate random numbers using mathematical algorithms, but such an approach is deterministic and reapplication of the seed generates the same random number.

True Random Number Generators (TRNGs) utilise a physical process such as thermal electrical noise that in theory is stochastic, but in reality is somewhat deterministic as it relies on post-processing algorithms to provide randomness and can be influenced by biases within the physical device. Furthermore, by being based on chaotic and complex physical systems, TRNGs are hard to model and therefore it can be hard to know if they have been manipulated by an attacker to retain the “quality of the randomness” but from a deterministic source.Ultimately, the deterministic nature of PRNGs and TRNGs opens them up to quantum attack.

A further problem with TRNGs for secure comms is that they are limited to either delivering high entropy (randomness) or high throughput (key generation frequency) but struggle to do both. In practise, as key requests ramp to serve ever-higher communication data rates, even the best TRNGs will reach a blocking rate at which the randomness is exhausted and keys can no longer be served. This either leads to downtime within the comms system, or the TRNG defaults to generating keys of 0 rendering the system highly insecure; either eventuality results in the system becoming highly susceptible to denial of service attacks.

Quantum Random Number Generators (QRNGs) are a new breed of RNGs that leverage quantum effects to generate random numbers. Not only does this achieve full entropy (i.e., truly random bit sequences) but importantly can also deliver this level of entropy at a high throughput (random bits per second) hence ideal for high bandwidth secure comms.

Having said that, not all QRNGs are created equal – in some designs, the level of randomness can be dependent on the physical construction of the device and/or the classical circuitry used for processing the output, either of which can result in the QRNG becoming deterministic and vulnerable to quantum attack in a similar fashion to the PRNG and TRNG. And just as with TRNGs, some QRNGs can run out of entropy at high data rates leading to system failure or generation of weak keys.

Careful design and robustness in implementation is therefore vital – Crypta Labs have been pioneering in quantum tech since 2014 and through their research have designed a QRNG that can deliver hundreds of megabits per second of full entropy whilst avoiding these implementation pitfalls.

Summary

Whilst time estimates vary, it’s considered inevitable that quantum computers will eventually reach sufficient maturity to beat today’s public-key algorithms – prosaically dubbed Y2Q. The Cloud Security Alliance (CSA) have started a countdown to April 14 2030 as the date by which they believe Y2Q will happen.

QKD was the industry’s initial reaction to counter this threat, but whilst meeting the security need at a theoretical level, has arguably failed to address implementation concerns in a way which is cost effective, scalable and secure for the mass market, at least to the satisfaction of NCSC and NSA.

Proponents of QKD believe key agreement and authentication mechanisms within public-key schemes can never be fully quantum-safe, and to a degree they have a point given the recent undermining of Rainbow, one of the short-listed PQC candidates. But QKD itself is only a partial solution.

The collaborative project led by NIST is therefore the most likely winner in this race, and especially given its backing by both the NSA and NCSC. Post-quantum cryptography (PQC) appears to be inherently cheaper, easier to implement, and deployable on edge devices, and can be further strengthened through the use of advanced QRNGs. Deviating away from the current public-key approach seems unnecessary compared to swapping out the current algorithms for the new PQC alternatives.

Looking to the future

Setting aside the quantum threat to today’s encryption algorithms, an area ripe for innovation is in true quantum communications, or quantum teleportation, in which information is encoded and transferred via the quantum states of matter or light.

It’s still early days, but physicists at QuTech in the Netherlands have already demonstrated teleportation between three remote, optically connected nodes in a quantum network using solid-state spin qubits.

Longer term, the goal is to create a ‘quantum internet’ – a network of entangled quantum computers connected with ultra-secure quantum communication guaranteed by the fundamental laws of physics.

When will this become a reality? Well, as with all things quantum, the answer is typically ‘sometime in the next decade or so’… let’s see.

Pharrowtech expands into the UK and grows its management team to boost next generation wireless products. Global millimeter-wave wireless tech leader accelerates hiring and opens two new offices as it grows its product and development capabilities.

Pharrowtech, a growing market leader in mmWave solutions for next-generation wireless applications, has opened its first UK design centre in the Thames Valley area. The UK office will be led by Dr Mehul (Micky) Mehta, and it will help bolster the company’s resources and talent pool as it grows its product offering. The news comes as Pharrowtech continues to hire at pace, including the recent acquisition of two significant new management hires.

In addition to Dr Mehta’s appointment in the UK, Claudia Bastian joins as Global HR Manager based in Leuven, Belgium. Pharrowtech’s Leuven office has moved to Philipssite to accommodate the company’s growing team and operations. It boasts a new state-of-the-art mmWave laboratory with the latest equipment, including the most advanced mmWave network analyzer technology, several anechoic chambers, and chip characterization and qualification setups.

The opening of Pharrowtech’s UK office and the move to a new headquarter office in Belgium quadruples the company’s office and lab capabilities. It is an essential component in Pharrowtech’s growth, as it continues to develop complete solutions for next generation wireless applications and expands its capabilities to serve even more commercial markets. The UK office is now actively seeking engineers with skills in digital modem design, real-time embedded software and digital silicon.

Dr Mehul Mehta, Vice President of Pharrowtech UK, has more than two decades’ experience of working in technical and leadership roles in the wireless communications industry, having most recently served as CEO of Celestia Technologies Group. He is an expert in physical and MAC layer topics – with specialities in areas including radio (wireless) communications and digital design. To date, he has developed several solutions for 3GPP (UMTS, LTE), IEEE 802.11 (WLAN) and IEEE 802.16 (WiMAX) standards, has authored numerous journal and conference papers and holds 10 patents.

Claudia Bastian, Global HR Manager, Pharrowtech, brings over 30 years of international experience at global technology businesses including DellEMC and OneSpan. She will spearhead Pharrowtech’s international recruitment drive, working to attract and retain the right technical talent.

Wim Van Thillo, CEO and co-founder, Pharrowtech comments, “The last two years have been a period of rapid progress for us, with product launches, significant investments, and successful trials with leading companies in the wireless industry. We are now focused on building our world-class global team of experts to fulfil our ambitions and deliver innovative technology that meets the needs of next generation wireless applications. With its well-established history in wireless systems and silicon design, the UK is an ideal location for the next phase in our growth.”

Dr Mehul Mehta comments, “This is an exciting time for the wireless industry and Pharrowtech stood out to me as a company truly at the forefront of it. Pharrowtech’s team of experts has the potential to make some of the most advanced wireless solutions a reality, to benefit people everywhere. I’m delighted to be joining the company and to help lead this next period of expansion.”

Claudia Bastian adds, “Pharrowtech is a true pioneer in the wireless communications industry and creating a talented team with the best technical expertise will be crucial to our success. I’m looking forward to the challenge of identifying these individuals worldwide to accelerate the company’s growth.”

Those interested in receiving more information about open roles or applying, should visit: https://pharrowtech.com/careers

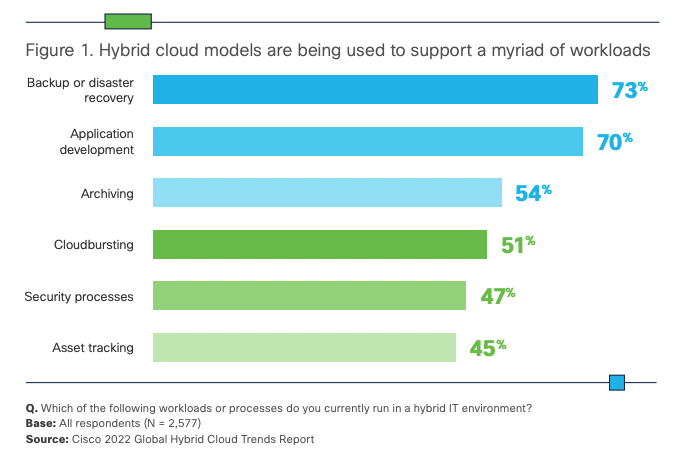

The computing landscape is one of constant change and the move to cloud has arguably been the most transformative in recent years. Early concerns around security have given way to adoption – according to Cisco’s (2022), 82% of businesses now routinely use hybrid cloud. Ironically they found it’s often security concerns driving a hybrid-cloud approach by giving teams the ability to selectively place workloads in public clouds while keeping others on-prem, or using different regions to meet data residency requirements.

But with players such as AWS, GCP and Azure creating a stranglehold on the market, there is growing awareness and a movement away from becoming too dependent on any single Cloud Service Provider (CSP), instead taking a multi-cloud approach.

Decentralisation is currently du jour in many aspects of the online world, most notably in finance (DeFi), and is starting to gain attention in the compute space through companies such as StorJ, Akash and Threefold – in essence, a blockchain-enabled approach that harnesses distributed compute & storage and will no doubt contribute to the Web3 scaffolding that underpins the future metaverse.

But decentralisation is a radical approach, and only suited to particular applications. For most enterprises today, the focus is on successfully migrating their apps into the cloud, and employing services from multiple CSPs to mitigate the dangers of becoming overly reliant on any single provider. But as many are discovering, taking a multi-cloud approach brings its own complications.

This article looks at some of the considerations and challenges that enterprises face when migrating to multi-cloud, and the resources that are out there to help them.

Cloud exuberance is over

Much has been said regarding the benefits of migrating enterprise apps to the cloud: more agility and flexibility in gaining access to resources as and when needed; an ability to scale rapidly in accordance with business needs; enabling apps hosted on-prem to burst into the cloud to accelerate workload completion time and/or generate insights with more depth and accuracy.

But it’s not all plain sailing, the hype surrounding the cloud often hides a number of drawbacks that have resulted in many businesses failing to realise the benefits expected – a recent study by Accenture Research found that only one in three companies reported achieving their cloud aims.

“Lift and shift” of legacy apps to the cloud doesn’t always work due to issues around data gravity, sovereignty, compliance, cost, and interdependencies; or perhaps because the app itself has been optimised to a specific hardware and OS used on-prem that isn’t readily available at scale in the cloud. This problem is further exacerbated by enterprises needing to move to a multi-cloud architecture.

Many believe that utilising cloud resources has a lower total cost of ownership than operating on-prem. But this doesn’t always materialise and depends on the type of systems, apps and workloads that are being considered for migration.

In the case of high performance compute (HPC) which is increasing in importance for deep learning models, simulations and complex business decisioning, enterprises running these tasks on their own infrastructure commonly dimension for high utilisation (70-90%) whereas pricing in the cloud is often orientated towards SaaS-based apps where hardware utilisation is typically <20%.

For many enterprises therefore, embarking on a programme of modernisation often results in getting caught in the middle, struggling to reach their transformation goals amidst a complex dual operating environment with some systems migrated to the cloud whilst others by necessity stay on-prem.

Optimising workloads for the cloud

For those workloads that are migrated to the cloud, delivering on the cost & performance targets set by the enterprise will be dependent on real-time analysis of workload snapshots, careful selection of the most appropriate instance types, and optimisation of the workloads to the instances that are ultimately used.

Achieving this requires a comprehensive understanding of all the compute resources available across the CSPs (assuming a multi-cloud approach), being able to select the best resource type(s) and number of instances for a given workload and SLA requirements (resilience, time, budget). In addition, where spot/pre-emptible instances are leveraged, workload data needs to be replicated between the CSPs and locations hosting the spot instances to ensure availability.

Once the target instance types are known, workload performance can be tuned using tools such as Granulate that optimise OS-level scheduling and resource management to improve performance (up to 40-60%), especially for those instances leveraging new silicon.

Similarly, companies such as CloudFix help enterprises ensure their AWS instances are auto-updated with the latest patches to deliver a more compliant cloud that performs better and costs less by removing the effort of applying manual fixes.

Spot instances offered by the CSPs at a discount are ideal for loosely coupled HPC workloads, and often instrumental in helping enterprises hit their targets on performance and cost; but navigating the vast array of instance types and pricing models is far from trivial.

Moreover, prices often fluctuate based on demand, availability, and region. 451 Research’s Cloud Price Index (CPI) for instance recorded more than 1.2 million service changes in 2021 (SKUs added, SKUs removed, price increases and price decreases).

So whilst spot instances can help with budgetary targets and economic viability for HPC workloads, juggling instances to optimise cost and break-even point between reserved instances, on-demand, and spot/preemptible instances, versus retaining workloads on-prem, can become a real challenge for teams to manage.

Furthermore, with spot prices fluctuating frequently and resources being reclaimed with little notice by the CSP, teams need to closely monitor cloud usage, throttling down workloads when pricing rises above budget, migrating workloads when resources are reclaimed, and tearing down resources when they’re no longer needed. This can soon become an operational and administrative nightmare.

Cloud Management Platforms

Cloud Management Platforms (CMP) aim to address this with a set of tools for streamlining operations and enabling cloud resources to be utilised more effectively.

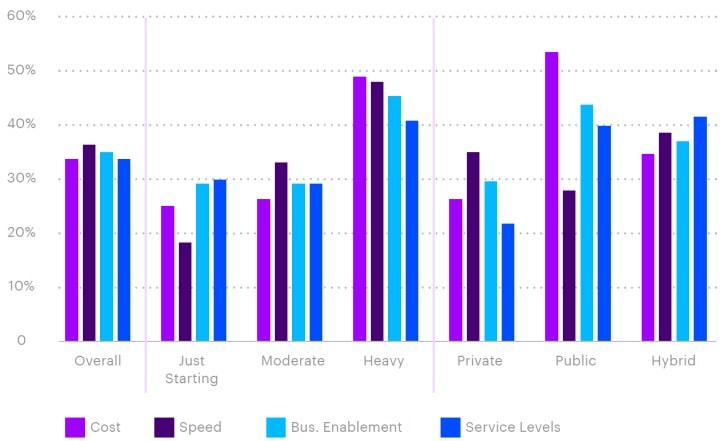

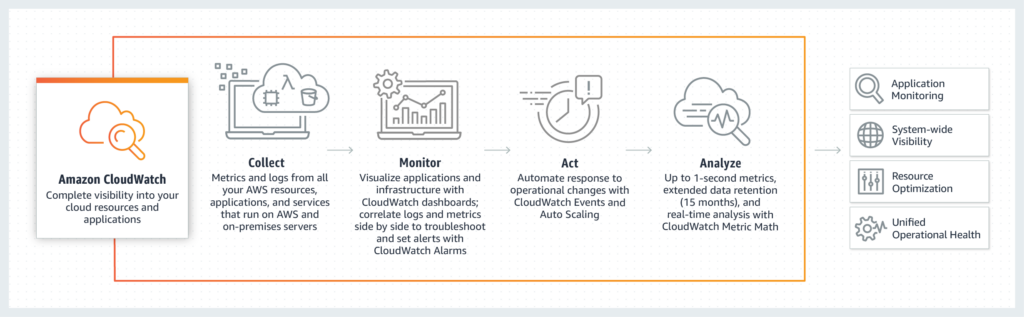

Whilst it’s true that CSPs provide such tools to aid their customers (such as AWS CloudWatch), they are proprietary in nature and vary in functionality, complicating the situation for any enterprise with multi-cloud deployments – in fact, Cisco found that a third of responding organisations highlighted operational complexity as a significant concern when adopting hybrid or multi-cloud models.

This is where CMPs come in, providing a “unified” experience and smoothing out the differences when working with multiple CSPs.

Such platforms provide an ability to:

- create clusters of mixed instance types to avoid provider lock-in and circumvent constraints imposed by any single CSP when dealing with large clusters

- manage workloads and resource provisioning simultaneously to maximise efficiency

- track progress and scheduling of all workloads via a single dashboard

- dynamically change prioritisation or allocation of resources to deliver results on time and within budget

- monitor when workloads start and complete to ensure that resources are not left running

CMPs achieve this by leveraging the disparate resources and tooling of the respective cloud providers to deliver a single homogenised set of resources for use by the enterprise’s apps.

Moreover, by unifying all elements of provisioning, scheduling and cost management within a single platform, they enable a more collaborative working relationship between teams within the organisation (FinOps). FinOps has demonstrated a reduction in cloud spend by 20-30% by empowering individual teams to manage their cloud usage whilst enabling better alignment with business metrics and strategic decision-making.

Introducing YellowDog

YellowDog is a leader in the CMP space with a focus on enterprises seeking a mix of public, private and on-prem resources for HPC workloads.

In short, the YellowDog platform combines intelligent orchestration, scheduling and dynamic policy-driven provisioning at scale across on-prem, hybrid and multi-cloud environments using agent technology. The platform has applicability ranging from containerised workloads through to supporting bare-metal servers without a hypervisor.

Compute resources are formulated into “on-demand” clusters and abstracted through the notion of workers (threads on instances). YellowDog’s Workload Manager is a cloud native scheduler that scales beyond existing technologies to millions of processor cores, working across all the CSPs, multi-region, multi-datacentre and multi-instance shapes.

It can utilise Spot type instances where others can’t, acting as both a native scheduler and meta-scheduler (invoking 3rd party technologies and creating specific workload environments such as Slurm, OpenMPI etc.) to work with both loosely coupled and tightly coupled workloads.

YellowDog’s workload manager matches workload demand with the supply of workers whilst ensuring compatibility (via YellowDog’s extensive Image Registry) and automatically reassigning workloads in case of instance removal – effectively it is “self-healing”, automatically provisioning and deprovisioning instances to match the workload queue(s).

The choice of which workers to choose is managed by the enterprise through a set of compute templates defining workload specific compute requirements, data compliance restrictions and enterprise policy on use of renewables etc. Compute templates can also be attribute-driven via live CSP information (price, performance, geographic region, reliability, carbon footprint etc.), and potentially in future with input from CPU and GPU vendors (e.g., to help optimise workloads to new silicon).

On completion, workload output can be captured in YellowDog’s Object Store Service for subsequent analysis and collection or as input to other workloads. By combining multiple storage providers (e.g. Azure Blob, Amazon S3, Google Cloud Storage) into one coherent data surface, YellowDog mitigate the issue of data gravity and ensure that data is in the right place for use within a workload. YellowDog also supports the use of other file storage technologies (e.g. NFS, Lustre, BeeGFS) for data seeding and management.

In addition, the enterprise can define pipelines that are automatically triggered when a new file is uploaded into an object store that spin up instances and work on the new file, and then shut down when the work is completed.

As jobs are running, YellowDog enables different teams to monitor their individual workloads with real-time feedback on progress and status, as well as providing an aggregate view and ability to centrally manage quotas or allowances for different clouds, users, groups and so forth.

In summary

Multi-cloud is becoming the norm, with businesses typically using >2 different providers. In fact Cisco found that 58% of those surveyed use 2-3 CSPs for their workloads, with 31% using more than 4. Effective management of these multi-cloud environments will be paramount to ensuring future enterprise growth. Cloud Management Platforms, such as that offered by YellowDog, will play an important role in helping enterprises to maximise their use of hybrid / multi-cloud.

The focus of AI and ML innovation to-date has understandably been in those areas characterised by an abundance of labelled data with the goal of deriving insights, making recommendations and automating processes.

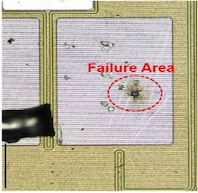

But not every potential application of AI produces enough labelled data to utilise such techniques – use cases such as spotting manufacturing defects on a production line is a good example where images of defects (for training purposes) are scarce and hence a different approach is needed.

Interest is now turning within academia and AI labs to the harder class of problems in which data is limited or more variable in nature, requiring a different approach. Techniques include: leveraging datasets in a similar domain (few-shot learning), auto-generating labels (semi-supervised learning), leveraging the underlying structure of data (self-supervised learning), or even synthesising data to simulate missing data (data augmentation).

Characterising limited-data problems

Deep learning using neural networks has become increasingly adept at performing tasks such as image classification and natural language processing (NLP), and seen widespread adoption across many industries and diverse sectors.

Machine Learning is a data driven approach, with deep learning models requiring thousands of labelled images to build predictive models that are more accurate and robust. And whilst it’s generally true that more data is better, it can take much more data to deliver relatively marginal improvements in performance.

Figure 1: Diminishing returns of two example AI algorithms [Source: https://medium.com/@charlesbrun]

Manually gathering and labelling data to train ML models is expensive and time consuming. To address this, the commercial world has built large sets of labelled data, often through crowd-sourcing and through specialists like iMerit offering data labelling and annotation services.

But such data libraries and collection techniques are best suited to generalist image classification. For manufacturing, and in particular spotting defects on a production line, the 10,000+ images required per defect to achieve sufficient performance is unlikely to exist, the typical manufacturing defect rate being less than 1%. This is a good example of a ‘limited-data’ problem, and in such circumstances ML models tend to overfit (over optimise) to the sparse training data, hence struggle to generalise to new (unknown) images and end up delivering poor overall performance as a result.

So what can be done for limited-data use cases?

A number of different techniques can be used for addressing these limited-data problems depending on the circumstances, type of data and the amount of training examples available.

- Few-shot learning

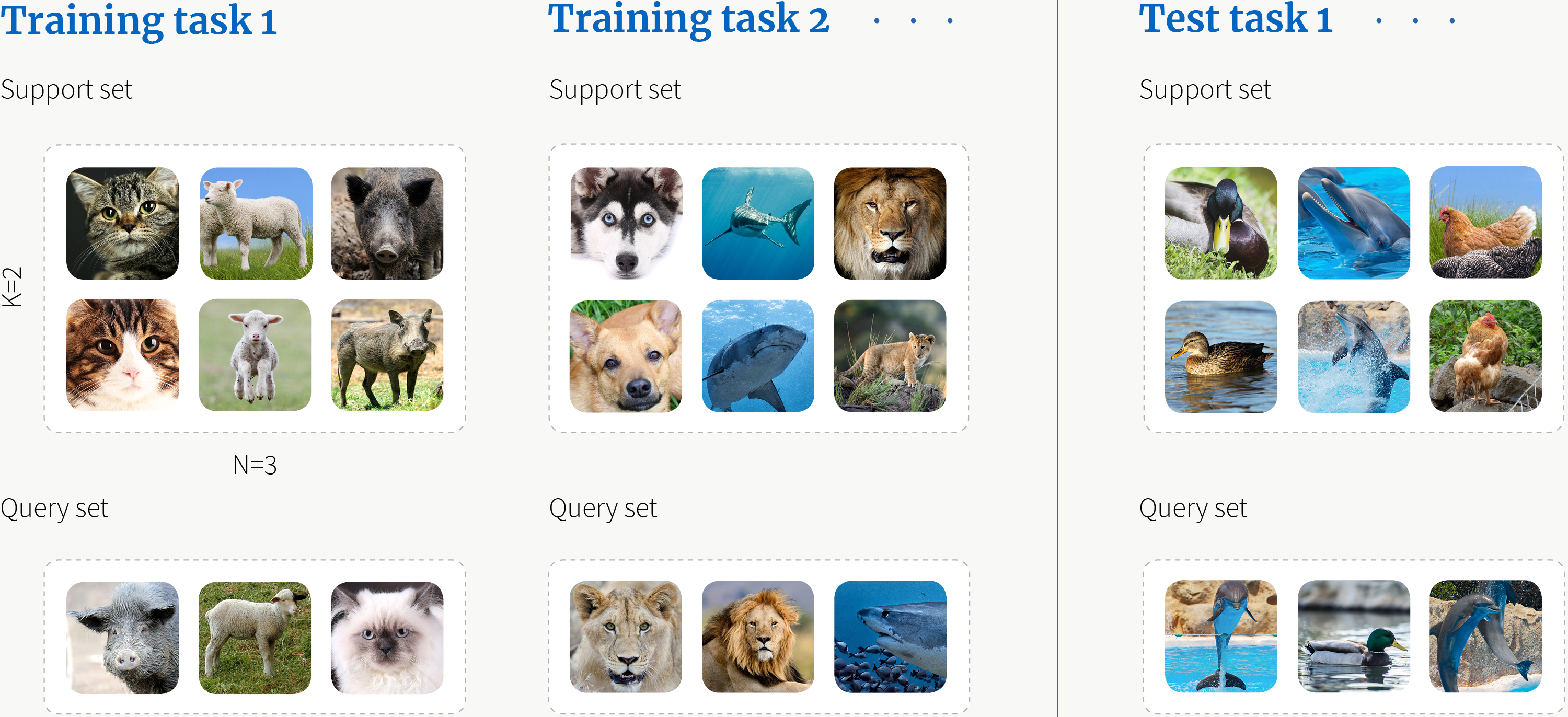

Few-shot learning is a set of techniques that can be used in situations where there are only a few example images (shots) in the training data for each class of image (e.g. dogs, cats). The fewer the examples, the greater the risk of the model overfitting (leading to poor performance) or adversely introducing bias into the model’s predictions. To address this issue, few-shot learning leverages a separate but related larger dataset to (pre)train the target model.

Three of the more popular approaches are meta-learning (training a meta-learner to extract generalisable knowledge), transfer learning (utilising shared knowledge between source and target domains) and metric learning (classifying an unseen sample based on its similarity to labelled samples).

Once a human has seen one or two pictures of a new animal species, they’re pretty good at recognising that animal species in other images – this is a good example of meta-learning. When meta-learning is applied in the context of ML, the model consecutively learns how to solve lots of different tasks, and in doing so becomes better at learning how to handle new tasks; in essence, ‘learning how to learn’ similar to a human – illustrated below:

Figure 2: Meta-learning [Source: www.borealisai.com]

Transfer learning takes a different approach. When training ML models, part of the training effort involves learning how to extract features from the data; this feature extraction part of the neural network will be very similar for problems in similar domains, such as recognising different animal species, and hence can be used in instances where there is limited data.

Metric learning (or distance metric learning) determines similarity between images based on a distance metric and decides whether two images are sufficiently similar to be considered the same. Deep metric learning takes the approach one step further by using neural networks to automatically learn discriminative features from the images and compute the distance metric based on these features – very similar in fact to how a human learns to differentiate animal species.

- Self-supervised & semi-supervised learning

Techniques such as few-shot learning can work well in situations where there is a larger labelled dataset (or pre-trained model) in a similar domain, but this won’t always be the case.

Semi-supervised learning can address this lack of sufficient data by leveraging the data that is labelled to predict labels for the rest hence creating a larger labelled dataset for use in training. But what if there isn’t any labelled data? In such circumstances, self-supervised learning is an emerging technique that sidesteps the lack of labelled data by obtaining supervisory signals from the data itself, such as the underlying structure in the data.

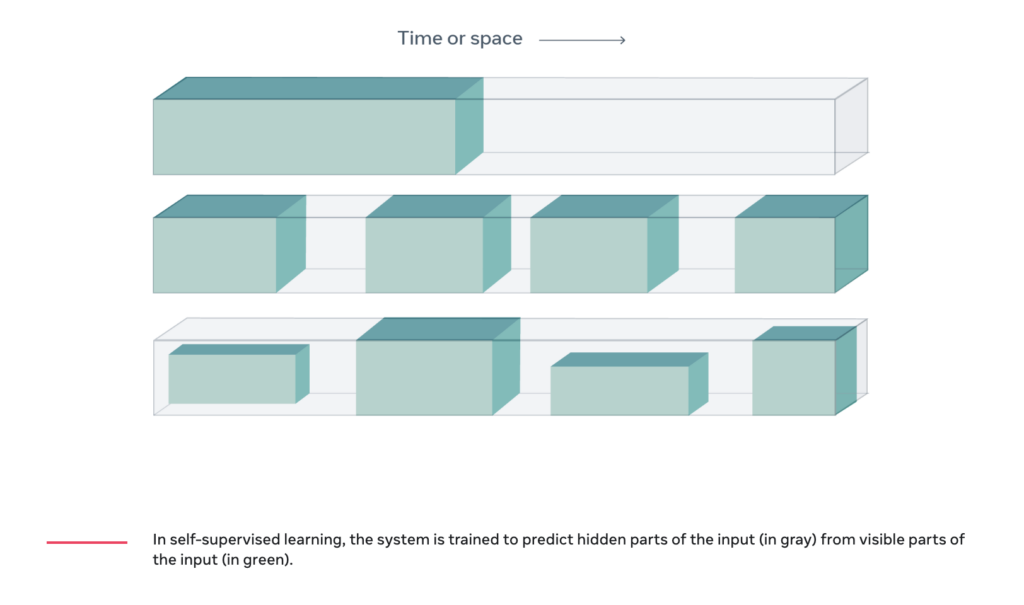

Figure 3 Predicting hidden parts of the input (in grey) from visible parts (in green) using self-supervised learning [source: metaAI]

- Data augmentation

An alternate approach is simply to fill the gap through data augmentation by simulating real-world events and synthesising data samples to create a sufficiently large dataset for training. Such an approach has been used by Tesla to complement the billions of real-world images captured via its fleet of autonomous vehicles for training their AI algorithms, and by Amazon within their Amazon’s Go stores for determining which products each customer is taking from the shelves.

Figure 4: An Amazon Go store [Source: https://www.aboutamazon.com/what-we-do]

Whilst synthetic data might seem like a panacea for any limited-data problem, it’s too costly to simulate for every eventuality, and it’s impractical to predict anomalies or defects a system may face when put into operation.

Data augmentation has the potential to reinforce any biases that may be present in the limited amount of original labelled data, and/or causing overfitting of the model by creating too much similarity within the training samples such that the model struggles to generalise to the real-world.

Applying these techniques to computer vision

Mindtrace is utilising the unsupervised and few-shot learning techniques described previously to deliver a computer vision system that is especially adept in environments characterised by limited input data and where models need to adapt to changing real-life conditions.

Pre-trained models bringing knowledge from different domains create a base AI solution that is fine-tuned from limited (few-shot) or unlabelled data to deliver state-of-the-art performance for asset inspection and defect detection.

Figure 6: Mindtrace [Source: https://www.mindtrace.ai]

This approach enables efficient learning from limited data, drastically reducing the need for labelled data (by up to 90%) and the time / cost of model development (by a factor of 6x) whilst delivering high accuracy.

Furthermore, the approach is auto-adaptive, the models continuously learn and adapt after deployment without needing to be retrained, and are better able to react to changing circumstances in asset inspection or new cameras on a production line for detecting defects, for example.

The solution is also specifically designed for deployment at the edge by reducing the size of the model through pruning (optimal feature selection) and reducing the processing and memory overhead via quantisation (reducing the precision using lower bitwidths).

Furthermore, through a process of swarm learning, insights and learnings can be shared between edge devices without having to share the data itself or process the data centrally, hence enabling all devices to feed off one-another to improve performance and quickly learn to perform new tasks (Bloc invested in Mindtrace in 2021).

In summary

The focus of AI and ML innovation to-date has understandably been in areas characterised by an abundance of labelled data to derive insights, make recommendations or automate processes.

Increasingly though, interest is turning to the harder class of problems with data that is limited and dynamic in nature such as the asset inspection examples discussed. Within Industry 4.0, limited-data ML techniques can be used by autonomous robots to learn a new movement or manipulation action in a similar way to a human with minimal training, or to auto-navigate around a new or changing environment without needing to be re-programmed.

Limited-data ML is now being trialled across cyber threat intelligence, visual security (people and things), scene processing within military applications, medical imaging (e.g., to detect rare pathologies) and smart retail applications.

Mindtrace has developed a framework that can deliver across a multitude of corporate needs.

Figure 7: Example Autonomous Mobile Robots from Panasonic [Source: Panasonic]